How AI Coding Tools Might Set You Up for Failure

AI coding tools can enhance business efficiency, but are they hiding risks that could set you up for failure? Discover the pros and cons of AI-assisted coding and learn how to use these tools wisely!

Hello everyone, and welcome back to another episode of Tech Trendsetters! As always, we're here to explore the cutting-edge technologies and groundbreaking trends that are shaping the industries we work in and driving innovation across the business landscape.

Today, we're diving into a topic that could be very lucrative: using AI as a code generation tool. If you've been keeping an eye on my recent posts, you may have noticed that I'm particularly bullish on the possibilities AI offers and its potential for the future. In this episode, I want to discuss how AI coding tools can help drive your business forward. However, I'll also explore the flip side: how they might slow you down and lead to less-than-optimal outcomes if not used properly.

Whether you're a seasoned engineer or a business owner looking to streamline your processes, this episode will provide valuable information to help you stay informed about AI-assisted coding. So, let's get started!

The Rise of AI Coding Assistants

First, I want to talk about the positives, the bright side of the story.

In recent years, the tech industry has witnessed a surge in the number of AI-powered coding assistance tools. The market is growing at an unprecedented pace, with new companies and offerings emerging almost every day. As a result, it's easy to get lost in the sea of available options. However, amid this chaos, a few major players stand out, such as:

GitHub Copilot;

Tabnine;

Amazon CodeWhisperer.

I remember when it all started, and many software engineers began discussing how quickly they would lose their jobs to these AI-powered tools. It's a valid concern, given the impressive capabilities of these assistants. However, I believe that rather than replacing developers, these tools will augment their abilities and help them become more efficient and productive.

Most articles about coding assistants on the internet emphasize a few shared ideas:

Firstly, they highlight the potential for these tools to improve code quality. Copilot, for example, provides recommendations and code suggestions based on the provided context, helping you prevent errors and adhere to best coding practices. They state that by leveraging the vast knowledge base of code repositories and the power of machine learning, these assistants can guide you towards writing cleaner, more efficient, and more maintainable code.

Secondly, these tools promise to increase productivity. The expectation is that developers can focus on more creative and strategic tasks instead of wasting time on mundane work. It's like having an AI-powered pair programmer by your side, suggesting the most appropriate code snippets, functions, and libraries based on your specific needs. This can save hours of searching through documentation and online forums, allowing engineers to concentrate on the bigger picture and the more challenging aspects of a project.

Finally, AI coding assistants can be incredible tools for learning and growth. For newbies just starting their programming journey, studying the recommendations and generated code can provide valuable insights into best practices, design patterns, and problem-solving techniques. By observing how the AI suggests tackling specific coding challenges, beginners can quickly expand their knowledge and gain practical experience.

In short, AI coding assistants help software developers write, test, and learn code more productively. At least, that's the hypothesis based on what I've seen and experienced so far.

From a business perspective, the potential benefits of AI coding assistants should be significant. By improving code quality and increasing developer productivity, these tools promise to help organizations reduce development costs, speed up time-to-market, and deliver more reliable software products.

The only question is: can we trust these promises?

Insights from Industry Surveys

Let's start with the real, measurable impact these AI coding assistants can have on your organization, beginning with some statistics.

First of all, software developers are among the most likely individuals to use AI in professional settings. As AI becomes more integrated into the economy, tracking how developers utilize and perceive AI is becoming increasingly important.

Stack Overflow, a question-and-answer website for computer programmers, conducts an annual survey of software developers. The 2023 survey, with responses from over 90,000 developers, included questions on AI tool usage for the first time, covering how developers use these tools, which tools they favor, and how they perceive them.

Among respondents who use AI tools, a significant majority (82.6%) use them to write code, followed by 48.9% for debugging and getting help, and 34.4% for documentation. While only 23.9% currently use AI for testing code, 55.2% are interested in adopting it for this purpose. This data suggests that AI is not only used extensively for code generation but could also significantly improve other parts of the development process, such as testing and documentation.

As GitHub states on its website, when GitHub Copilot launched for individuals in June 2022, more than 27% of developers' code files were, on average, generated by GitHub Copilot. Today, GitHub Copilot is behind an average of 46% of a developer's code across all programming languages, and in Java that number jumps to a crazy 61%. These statistics alone demonstrate the significant role AI already plays in the development process.

When asked about the primary advantages of AI tools in professional development, developers responded with increased productivity, accelerated learning and enhanced efficiency. These benefits may directly translate to organizational value, as they enable developers to potentially deliver high-quality code faster, reduce the time spent on repetitive tasks, and continuously improve their skills.

The part I personally like is how positive developers feel about AI tools. A significant majority of developers hold a positive view of them, and 42.2% report high or moderate trust in these technologies. Only 3.2% express unfavorable opinions about AI development tools.

That said, over the last five years the growing integration of AI into the economy has sparked hopes of a productivity boost. However, reliable data confirming AI's impact on productivity has been hard to find. Even so, the level of trust in and adoption of AI tools among software developers is truly remarkable.

The Dangers of Over-Reliance on AI Coding Assistants

This is where we pivot from the bright side to a less optimistic one.

No doubt, AI coding assistants like GitHub Copilot, Amazon CodeWhisperer and others offer tremendous potential for improving code quality, increasing productivity, and facilitating learning. But in my view, it's crucial to approach them with a balanced perspective. Over-reliance on these tools can lead to several pitfalls that may undermine not only your personal success but also your entire organization's long-term success.

One significant concern is the quality of the generated code. As impressive as these AI assistants may be, they are not infallible. The code they produce is based on statistical patterns and correlations learned from code repositories all over the world. This approach has its limitations.

I found multiple studies that point in the same direction, including the original OpenAI paper on Codex (the language model that powered Copilot), which reports that on its HumanEval benchmark the model produced a correct solution on the first try only about 29% of the time. While I'm confident that models have improved since that paper was published, the generated code today still often lacks proper refactoring, fails to use optimal solutions, and may not follow the latest best practices or language features. In essence, generated code always requires a thorough review.
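To make the 29% figure concrete: the Codex paper measures functional correctness, counting a problem as solved only if the generated code passes that problem's unit tests. It estimates pass@k (the chance that at least one of k attempts is correct) from n generated samples per problem, of which c pass. Below is a minimal Python sketch of that unbiased estimator; the function names and the three example problems are made up for illustration.

```python
from math import comb


def pass_at_k(n: int, c: int, k: int) -> float:
    """Unbiased pass@k estimate for a single problem.

    n: number of code samples generated for the problem
    c: how many of those samples passed the problem's unit tests
    k: how many attempts we allow ourselves
    """
    if not 0 <= c <= n or not 1 <= k <= n:
        raise ValueError("need 0 <= c <= n and 1 <= k <= n")
    if n - c < k:
        # Every possible set of k samples contains at least one passing sample.
        return 1.0
    # 1 - P(all k samples drawn without replacement fail)
    return 1.0 - comb(n - c, k) / comb(n, k)


def mean_pass_at_k(results: list[tuple[int, int]], k: int) -> float:
    """Average pass@k over a benchmark, given one (n, c) pair per problem."""
    return sum(pass_at_k(n, c, k) for n, c in results) / len(results)


# Hypothetical results for three problems, 10 samples each.
results = [(10, 3), (10, 0), (10, 10)]
print(round(mean_pass_at_k(results, k=1), 3))  # 0.433
print(round(mean_pass_at_k(results, k=5), 3))  # 0.639
```

With k = 1 the estimate reduces to c / n, the plain success rate, which is the kind of number behind the 29% figure. Larger k looks better only because you get several attempts, and someone still has to pick out the correct one.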

It's important to understand that language models like Codex are trained to mimic the average programmer's coding style. They don't inherently possess a sense of correctness or quality, and, most importantly, they lack strong reasoning, the foundation of software development.

The vast majority of code on GitHub, while valuable, is relatively old and written by developers with varying skill levels. Of course, there are brilliant repositories that contribute to the training data, but let's be honest – most of the openly available data is far from ideal standards. And the data is the foundation of the AI coding assistants. Consequently, the code generated by these assistants may not always align with your best intentions.

From my own experience, and based on a lot of real-world feedback, many AI coding tools lack real-world context and reasoning, as mentioned above. They rely solely on patterns and correlations, without considering edge cases or ensuring that the code actually achieves its intended purpose. This absence of real-world context can and will introduce bugs, vulnerabilities, and technical debt into your codebase.

Another significant challenge lies in the maintenance and debugging of AI-generated code. While these tools can rapidly produce substantial amounts of code, the bulk of a developer's time is then spent reviewing, modifying, and troubleshooting that code. This can quickly become a daunting task, especially when dealing with complex systems or large-scale projects.

Moreover, the constant context switching (between writing code and reviewing AI-generated code) can lead to reduced human focus. Developers may find themselves continuously shifting their attention between multiple tasks, such as writing code, checking the generated code for errors, and ensuring that the generated code aligns with their intentions. This constant context switching can be mentally taxing and may ultimately hinder productivity, as developers struggle to maintain a deep focus on any single task.

As for the learning benefit, it's important to note that senior engineers already have the hard skills and experience to use AI coding tools effectively as supplementary aids, whereas for junior developers, building a foundation becomes practically impossible with an over-reliance on such tools. Senior engineers can identify the limitations and pitfalls of AI-generated code, treating these tools as mere toys that enhance their workflow. Junior developers who rely heavily on AI coding tools, on the other hand, may find themselves at a significant disadvantage, struggling to develop the essential skills needed to become proficient programmers.

Hey, if you're wondering what makes good code good, I have another episode that covers exactly that topic.

The Hypothesis – What Code Quality to Expect with AI Assistants?

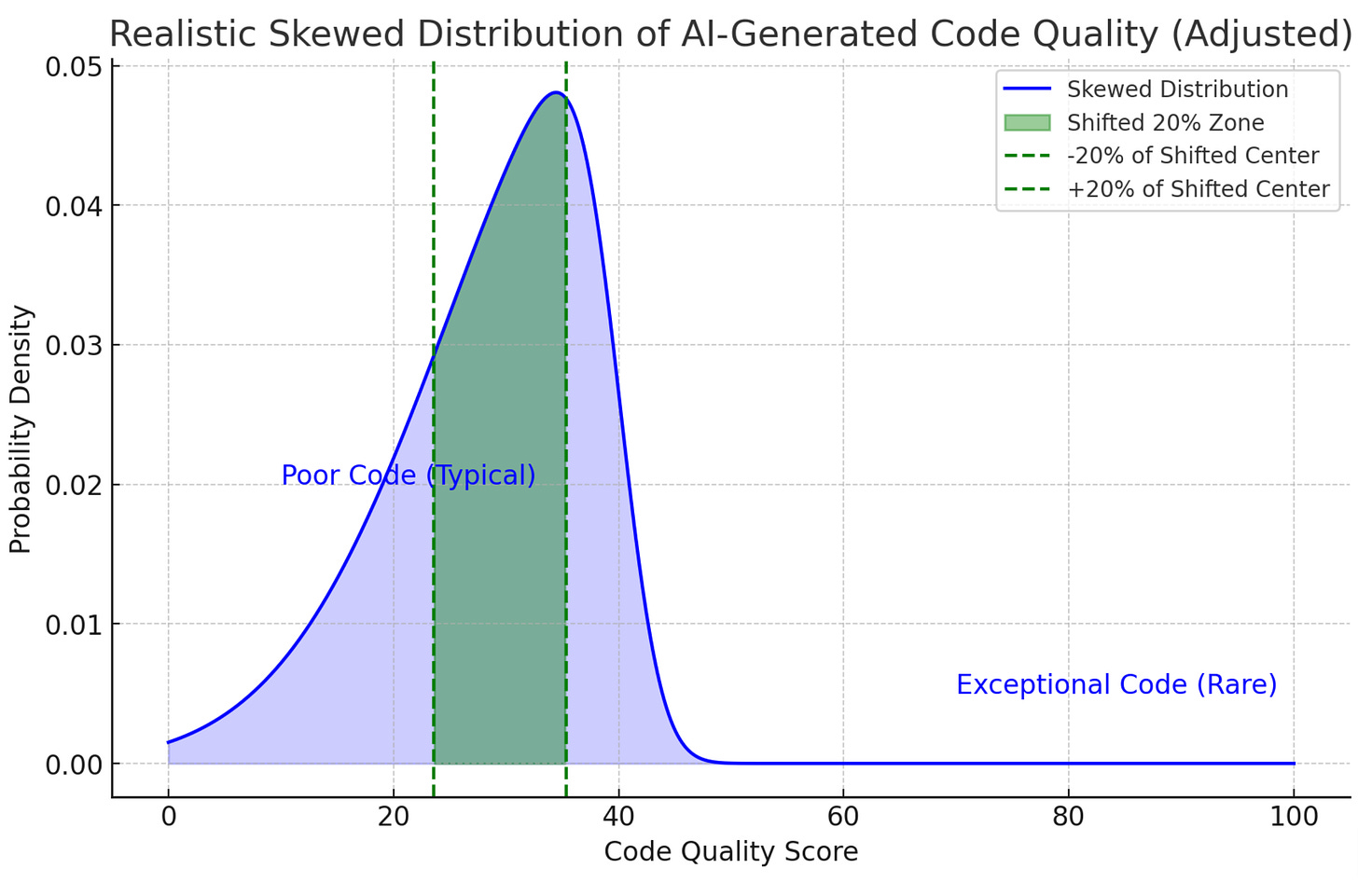

Now we are gradually coming to an interesting hypothesis I came up with recently. To better illustrate these concerns, let's consider the Gaussian distribution, also known as the normal distribution. In the context of AI-generated code, we can expect the quality and effectiveness of the code to follow a bell-shaped curve: a statistical pattern in which data cluster around a central point, with fewer instances appearing as you move away from the center.

In our graph, the x-axis represents the code quality score, an abstract score describing how good the code is, ranging from poor to exceptional. The y-axis shows the probability density, that is, how frequently certain quality scores occur within the dataset of AI-generated code. The peak of the curve represents the "most average code," where the majority of the code quality scores are concentrated.

This distribution is directly related to how AI coding assistants are trained. These models are trained on vast amounts of publicly available code from around the world. The training data consists of everything that was both identifiable as code and openly accessible. As a result, the models learn to generate code that mimics the patterns and characteristics of this global codebase.

The green shaded area highlights the range within ±20% of the mean code quality score. It illustrates where a significant portion of the AI-generated code falls, emphasizing that much of the generated code tends to be of around-average quality. On the left side, we have "Poor Code," representing the small portion of the training data that consists of suboptimal or even erroneous code. Similarly, on the right side, we find a small amount of "Exceptional Code."
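Since the chart is only a sketch, here is a minimal Python way to reproduce the idea numerically. It assumes, purely for illustration, a 0 to 100 quality scale with mean 50 and standard deviation 15 (no spread is specified for the chart), and computes how much probability mass falls inside the green ±20% band versus each tail.

```python
from math import erf, sqrt


def normal_cdf(x: float, mean: float, sd: float) -> float:
    """Cumulative distribution function of a normal distribution."""
    return 0.5 * (1.0 + erf((x - mean) / (sd * sqrt(2.0))))


def share_within(mean: float, sd: float, rel_band: float) -> float:
    """Probability mass within +/- (rel_band * mean) of the mean."""
    half_width = rel_band * mean
    return normal_cdf(mean + half_width, mean, sd) - normal_cdf(mean - half_width, mean, sd)


# Hypothetical parameters: quality on a 0-100 scale, mean 50, standard deviation 15.
MEAN, SD = 50.0, 15.0

band = share_within(MEAN, SD, rel_band=0.20)  # the green band: scores 40 to 60
tail = (1.0 - band) / 2                       # symmetric curve, so both tails are equal

print(f"around-average code (40-60): {band:.1%}")
print(f"below the band (<40): {tail:.1%}, above the band (>60): {tail:.1%}")
```

A narrower spread would put more of the code inside the band; what the sketch really encodes is the symmetry of the hypothesis, with the poor and exceptional sides exactly the same size.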

This distribution implies that while a small portion of the AI-generated code may indeed be of exceptional quality, a similarly small portion will be of very poor quality. The vast majority of the code, however, will be around the average.

This is what I call my hypothesis. At least that’s what we tend to expect from AI-generated code.

The Reality of AI-Generated Code Quality

From my personal experience spanning years in software development, I've never encountered a codebase where exceptional and suboptimal code were equally distributed. The reality is that AI-generated code follows a significantly skewed distribution, with the curve heavily weighted toward the lower-quality end of the spectrum. And that lower-quality part of the world's codebase often becomes the basis for AI training.

What AI assistants typically produce isn't average code – it's mediocre at best and problematic at worst. The theoretical Gaussian distribution I described earlier represents an idealized scenario that rarely materializes in practice.
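To contrast the two shapes, the sketch below samples from the symmetric hypothesis and from a skewed alternative. Both are assumptions chosen only for illustration: a normal distribution centred on 50 with standard deviation 15, and 100 × Beta(2, 5), a standard right-skewed shape with most of its mass at the low end and a long, thin tail toward high scores. The "exceptional" cut-off of 80 is likewise arbitrary.

```python
import random
import statistics

random.seed(7)  # fixed seed so the numbers are reproducible
N = 100_000

# Symmetric hypothesis: centred on 50 (sd of 15 is an assumption; the tails are unbounded).
symmetric = [random.gauss(50, 15) for _ in range(N)]
# Hypothetical skewed reality: 100 * Beta(2, 5), piled up at the low end with a thin high tail.
skewed = [100 * random.betavariate(2, 5) for _ in range(N)]


def share(samples, predicate):
    """Fraction of samples that satisfy the predicate."""
    return sum(1 for s in samples if predicate(s)) / len(samples)


for name, data in (("symmetric", symmetric), ("skewed", skewed)):
    below_mid = share(data, lambda q: q < 50)    # below the middle of the scale
    exceptional = share(data, lambda q: q > 80)  # assumed "exceptional" cut-off
    print(f"{name:>9}: mean {statistics.fmean(data):5.1f}, "
          f"median {statistics.median(data):5.1f}, "
          f"below 50: {below_mid:.1%}, above 80: {exceptional:.1%}")
```

Under the skewed model the median sits below the mean (the signature of a right-skewed curve), far more than half of the samples land in the lower half of the scale, and exceptional scores become rarer than in the symmetric case.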

We always aim for excellence but land somewhere short of it. It's like training for a marathon with the goal of breaking a record – you push yourself to the limit during practice, hoping that on race day you'll achieve something extraordinary. But the reality? You typically finish somewhat below your target time. Relying (or over-relying) on AI assistants, however, shifts the aim toward what we perceive as average, causing developers to deliver results that often fall below even that standard.

When you rely on AI-generated code without rigorous review, you're not building on stable ground – you're constructing your digital products on a foundation of compromised quality. The technical debt accumulates silently but relentlessly, until one day your development velocity grinds to a halt under the weight of poor architectural decisions and implementation flaws.

What makes this particularly dangerous is how seductive AI coding assistance can be. The code looks clean at first glance. It compiles. It might even pass basic tests. But beneath this veneer of functionality lurks code that lacks the nuanced understanding of edge cases, performance considerations, and maintainability that experienced developers instinctively build into their work.

I'm not suggesting we abandon AI tools – they have their place. But I am advocating for clear-eyed realism about what they deliver.

Projecting the Future of Digital Products with AI Coding Assistants

As we've explored the rise of AI coding assistants, their potential benefits, and the dangers of over-reliance, it's crucial to consider the long-term implications for the software industry and the organizations that adopt them. In this final chapter, I will try to project the future of digital products given the current state of AI coding tools, acknowledging that while these assistants have room for growth, they still lack the problem-solving abilities of a human being.

Before the integration of AI tools, one of the main factors contributing to the quality of code and, therefore, to organizational growth and success was the caliber of developers hired. To oversimplify: the more skilled developers you have, the stronger the codebase and the better the final product. Although this is a complex problem that remains unsolved, for the sake of this thought experiment, let's focus only on the moving parts of the system.

Step one: Organizational Success

In the past, the success variable (S) of a company was largely determined by the people it hired. These engineers strived to produce code that was better than average, putting their best efforts into building software that delivers value. However, with the introduction of AI, organizations now have the opportunity to widely adopt this technological benefit and empower every developer with a business version of GitHub Copilot, providing effortless access to improve productivity.

Step two: AI Adoption as an Evolutionary Process

From a psychological perspective, humans are inherently lazy creatures, always seeking the easiest solution to a problem. This means that, sooner or later, with overly accessible AI assistance, developers will start relying entirely on these tools, regardless of the complexity of the task at hand. It's simply how our brains are wired to conserve energy and minimize effort.

According to the principle of least effort, people tend to choose the path of least resistance when faced with multiple options to achieve a goal. This psychological concept suggests that as AI coding assistants become more readily available and easier to use, developers will naturally gravitate towards relying on them, even if the generated code is not of the highest quality.

Step three: Evolution Works in Unexpected Ways

As adoption spreads, more developers write code with the help of these tools and, over time, may rely on code generation entirely. One day, the entire business may find itself with a foundational codebase whose quality has dropped from its familiar level (S) to the base level that AI tools output. At this point, the organization's success variable drops from S to S-N, where N represents the difference between the previously hand-crafted code and the most average code generated by AI assistants.

However, the real problem arises as the organization continues to operate with this new base level (S-N) of coding standards and quality. As it becomes widely accepted and normalized, the organization's output may decline even further.

You may even say at this point, "But stop, no, we have the best guidelines and company policies, and they won't allow this to happen." But the reality is that change comes in small, often unnoticed steps. By the time you realize it, it's already too late.

Step four: Dropping Below Baseline

Drawing from economics, we know that when we aim to achieve X, the actual outcome will, statistically and roughly, be X-K, where K is a coefficient representing the aggregate inefficiencies and losses in the process, including factors like time delays, resource wastage, communication overhead, and error rates.

In this scenario, since the new base level is already established, the organization now aims at S-N, so its success variable moves from the original S to S-N-K, potentially falling below the average output of any LLM-based coding tool. This harsh possibility once again highlights the importance of striking a balance between leveraging AI and maintaining human oversight (yeah, smells like superalignment).
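Writing steps three and four out explicitly shows how the penalties stack: the organization now aims at the AI baseline, so the X in X-K is S-N. The numbers on the last line are invented purely for illustration.

```latex
\begin{aligned}
X &= S - N && \text{(the new target: the AI-generated baseline)} \\
X - K &= S - N - K && \text{(what aiming at } X \text{ actually delivers)} \\
S = 100,\; N = 20,\; K = 10 \;&\Rightarrow\; S - N - K = 70 && \text{(hypothetical values)}
\end{aligned}
```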

Step five: The Vicious Cycle

As more and more organizations worldwide rely on AI-generated code, a new problem emerges (one we partially covered in a previous episode): the scarcity of high-quality, human-written training data. LLMs require vast amounts of data to learn and improve, but as more developers lean on AI coding assistants, the proportion of code written solely by humans diminishes. This scarcity leads to a concerning scenario where the training process for new AI models inevitably includes data that was already produced by previous generations of LLMs, creating a feedback loop where AI learns from code that was, at least partially, generated by AI.

Basically, the models become trapped in a cycle of learning from their own outputs, reinforcing suboptimal coding practices, propagating errors, and limiting innovation.
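The feedback loop can be illustrated with a toy Python simulation, a heavily simplified cousin of what researchers call model collapse. Every detail is an assumption: "code quality" is a single number, each model generation just estimates the mean and spread of its training data, it favours typical output by keeping only samples within one standard deviation of its mean, and the next generation trains only on those outputs.

```python
import random
import statistics

random.seed(0)       # fixed seed for reproducibility
SAMPLES = 5_000      # size of each generation's training set (assumed)
GENERATIONS = 6

# Generation 0 trains on "human-written" data: quality scores from N(50, 15) (assumed).
data = [random.gauss(50, 15) for _ in range(SAMPLES)]
history = []         # spread of the training data seen by each generation

for gen in range(GENERATIONS):
    # "Train" a toy model: estimate the mean and spread of its training data.
    mu, sd = statistics.fmean(data), statistics.stdev(data)
    history.append(sd)
    print(f"generation {gen}: mean {mu:5.1f}, spread (sd) {sd:5.2f}")
    # The model favours typical output: sample from the fit and keep only results
    # within one standard deviation of the mean (a deliberate simplification).
    outputs = []
    while len(outputs) < SAMPLES:
        x = random.gauss(mu, sd)
        if abs(x - mu) <= sd:
            outputs.append(x)
    # The next generation trains only on the previous model's output.
    data = outputs
```

In this setup the mean barely moves while the spread shrinks with every generation, because the tails, including the rare exceptional code, are never passed on. Real training pipelines are far messier; the sketch only shows the direction of the effect the feedback-loop argument describes.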

To represent this degradation, we introduce a new variable, F, which grows with each iteration of the feedback loop. The organization's success variable now becomes S-N-K-F, where S represents the original success variable, N represents the difference between hand-crafted and AI-generated code, K represents the aggregate inefficiencies and losses in the process, and F represents the degradation caused by the feedback loop.
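For readers who prefer code to algebra, the full thought experiment fits in a few lines of Python. It is a minimal sketch: the parameter values are hypothetical, and the text only says that F grows with each iteration, so the linear growth used here is an assumption (compounding growth would be equally defensible).

```python
from dataclasses import dataclass


@dataclass
class SuccessModel:
    """Toy version of the S - N - K - F thought experiment (all values hypothetical)."""
    s: float           # original success variable (hand-crafted code)
    n: float           # gap between hand-crafted and average AI-generated code
    k: float           # aggregate inefficiencies: aiming at X yields roughly X - K
    f_per_loop: float  # assumed extra degradation added by each feedback-loop iteration

    def feedback_penalty(self, iteration: int) -> float:
        """F grows with every iteration; linear growth is an assumption."""
        return self.f_per_loop * iteration

    def success(self, iteration: int) -> float:
        """Success after a given number of feedback-loop iterations: S - N - K - F."""
        return self.s - self.n - self.k - self.feedback_penalty(iteration)


model = SuccessModel(s=100, n=20, k=10, f_per_loop=3)
for it in range(4):
    print(it, model.success(it))  # 70, 67, 64, 61
```

N and K are one-off drops, while F keeps eating into the result for as long as the loop runs, which is why the feedback loop is the most worrying term.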

Cautious Optimism

If you've just scrolled down to this point, no problem, here is a little recap especially for you! In today's episode we've explored the potential benefits and pitfalls of AI coding assistants, and one thing is clear: these tools are here to stay and will continue to shape the future of software development. However, it's crucial to approach this future with a balanced perspective, recognizing the importance of human expertise and oversight in the development process.

In reality, I believe the scenario I described above is a bit exaggerated. While it's true that over-reliance on AI coding assistants can lead to suboptimal code quality and hinder the growth of the business as a whole, it's unlikely that entire organizations will completely abandon human-written code in favor of AI-generated code.

Instead, I believe that AI coding assistants have the potential to be a transformative force for good in the world of software development, but only if we use them wisely.

However, for business owners and higher management, it's essential to establish guidelines and best practices for using AI coding assistants within their organizations. This can include:

Encouraging developers to use AI coding assistants as a starting point for code generation, but always reviewing, refactoring, and optimizing the generated code.

Investing in training and education programs to ensure that developers, especially non-senior ones, have a strong foundation in programming principles and best practices, independent of AI tools.

Implementing code review processes that prioritize human expertise and critical thinking, ensuring that AI-generated code is thoroughly vetted before being integrated into the codebase.

Monitoring the use of AI coding assistants within the organization and regularly assessing their impact on code quality, productivity, and developer growth.

By taking a proactive approach to managing the use of AI coding assistants, organizations can harness the benefits of these tools while mitigating the risks associated with over-reliance.

Moreover, as AI technology continues to advance, it's likely that these coding assistants will become more sophisticated, with improved context awareness and problem-solving capabilities. However, it's important to remember that even as AI coding assistants evolve, they will never fully replace the creativity, critical thinking, and problem-solving skills of human developers. The future of software development will be one of collaboration between humans and AI, with each playing to their strengths to create innovative, efficient, and robust digital products. At least that's what I want to believe.

Thanks for exploring the world of AI code generation tools with me today! It's a subject I've been eager to discuss in more detail for a long time. As our world continues to evolve, so should we! Stay tuned for more episodes ahead!


Bonus track:

Copilot completely shook up the software industry, set a new standard, and opened up a whole new market niche, so you might wonder who came up with the idea.

Alex Graveley, a software engineer at GitHub, was one of the first to get his hands on OpenAI's code model. Together with his colleague Albert, he prototyped Copilot and developed the in-the-wild testing harness that's still in use today. Eventually, he came up with the name "Copilot."

In the end, after 1.5 years of work taking it from prototype to production, he received an impressive $20k bonus and a pat on the shoulder. Impressive, isn't it?