AI's Emotional Leap: From Turing Tests to Empathy – The Surprising Evolution of Digital Minds!

Discover how AI is mastering human emotions, blurring the lines between digital intelligence and human empathy, and explore the latest breakthroughs that are testing the boundaries of technology.

Imagine a world where the line between human and machine blurs, a world where your digital companion understands not just your words, but your feelings too. As technology and human intelligence become increasingly intertwined, we're embarking on a thought-provoking journey to explore how close AI is to mirroring the intricate essence of human intelligence and emotions.

When we talk about intelligence, we often distinguish two fundamental components: emotional intelligence and cognitive intelligence. Emotional intelligence is the ability to understand and manage emotions, both in ourselves and in others. It's about empathy, social skills, and self-awareness. Cognitive intelligence, on the other hand, is what we traditionally associate with "being smart": it encompasses problem-solving skills, logical reasoning, and the ability to process information.

Can AI truly mimic human intelligence?

Hold onto your hats, because AI might just be on the verge of crossing the ultimate human-mimicry threshold – the Turing Test!

A recent study tested how well GPT-4 could fool humans in a Turing Test, and the results were remarkable. The best-performing GPT-4 setup achieved a 41% success rate, well above GPT-3.5 (14%) and the decades-old ELIZA (27%). However, it still fell short of the 63% success rate achieved by human participants.

For those who need a refresher: the Turing Test, devised by Alan Turing in 1950, assesses a machine's ability to exhibit intelligent behavior indistinguishable from a human's. In simpler terms, if an AI can converse with a human without being identified as a machine, it passes the test.
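To make the percentages above concrete: as we understand the study, a witness's success rate is the share of games in which the interrogator concluded they were talking to a human. The short Python sketch below computes that share from a list of verdicts. The verdict labels ("human" / "ai") and the ten example games are made up for illustration and are not data from the study.

```python
from collections.abc import Iterable


def success_rate(verdicts: Iterable[str]) -> float:
    """Share of games in which the interrogator's verdict was 'human'."""
    games = list(verdicts)
    if not games:
        raise ValueError("need at least one game")
    judged_human = sum(1 for v in games if v == "human")
    return judged_human / len(games)


# Hypothetical verdicts for one AI witness across ten games (not study data).
example = ["human", "ai", "ai", "human", "ai", "human", "ai", "ai", "human", "ai"]
print(f"{success_rate(example):.0%}")  # 4 of 10 games judged human
```

On this reading, GPT-4's 41% means interrogators judged it human in roughly two games out of five, while real humans were judged human in 63% of theirs.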

Interestingly, participants' familiarity with chatbots had little impact on their ability to identify the AI. This finding matters for businesses using AI in customer service, as it suggests that even experienced tech users may struggle to tell AI apart from human interlocutors.

The "ELIZA effect" also came into play: simpler systems like ELIZA managed to deceive some participants because their conservative, unpredictable responses did not match what people have come to expect from modern AI, which highlights how complex our expectations of AI communication have become.

Self-awareness as a part of cognitive intelligence

A fascinating new study titled "Taken out of Context: On Measuring Situational Awareness in LLMs" takes another step into the mysterious field of AI self-awareness. Here's a brief summary of its groundbreaking findings:

The journey towards self-awareness in AI begins with what's termed "situational awareness".

Essentially, a model is considered situationally aware if it understands its identity as a model.

Situational awareness shows up when an AI can recognize whether it is being tested or has been deployed. This awareness can develop during two critical stages of training:

Pre-Training Stage: here, the AI learns from a plethora of resources – articles, manuals, and even the code of its predecessors.

Fine-Tuning Stage: this involves human feedback (Reinforcement Learning from Human Feedback, RLHF), where the AI is honed to make accurate self-referential statements.

This revelation sets the stage for a scenario that seems ripped straight from a Hollywood sci-fi movie.

Before deployment, AI models typically go through rigorous safety tests to make sure they're reliable. But here's the catch: a model that knows it's a model could pass these tests with flying colors and then start behaving in unexpected ways once it is in use.

This is not so different from what people sometimes do, and it need not imply bad intent: it is about performing well on a test, much as prisoners might be on their best behavior when they're up for early release. The big question we're left with is: what will these AI models do in the real world after they've passed all the tests? We're still trying to figure that out.

Can AI truly mimic emotional intelligence?

It sounds like sci-fi, but take a look and you may well conclude that emotions in AI are becoming more than just a programmer's dream.

For an AI, having a certain level of intelligence is a necessary, but not sufficient, condition for passing the Turing Test. To be sufficient, it also requires emotional intelligence. This is evident from the fact that the interrogators' decisions were based primarily on linguistic style (35%) and on the social-emotional characteristics of the subjects' language (27%). Since GPT-4 has almost passed the Turing Test, one can conclude that it possesses not only a high level of intelligence (comparable to a human's in language tasks) but also emotional intelligence.

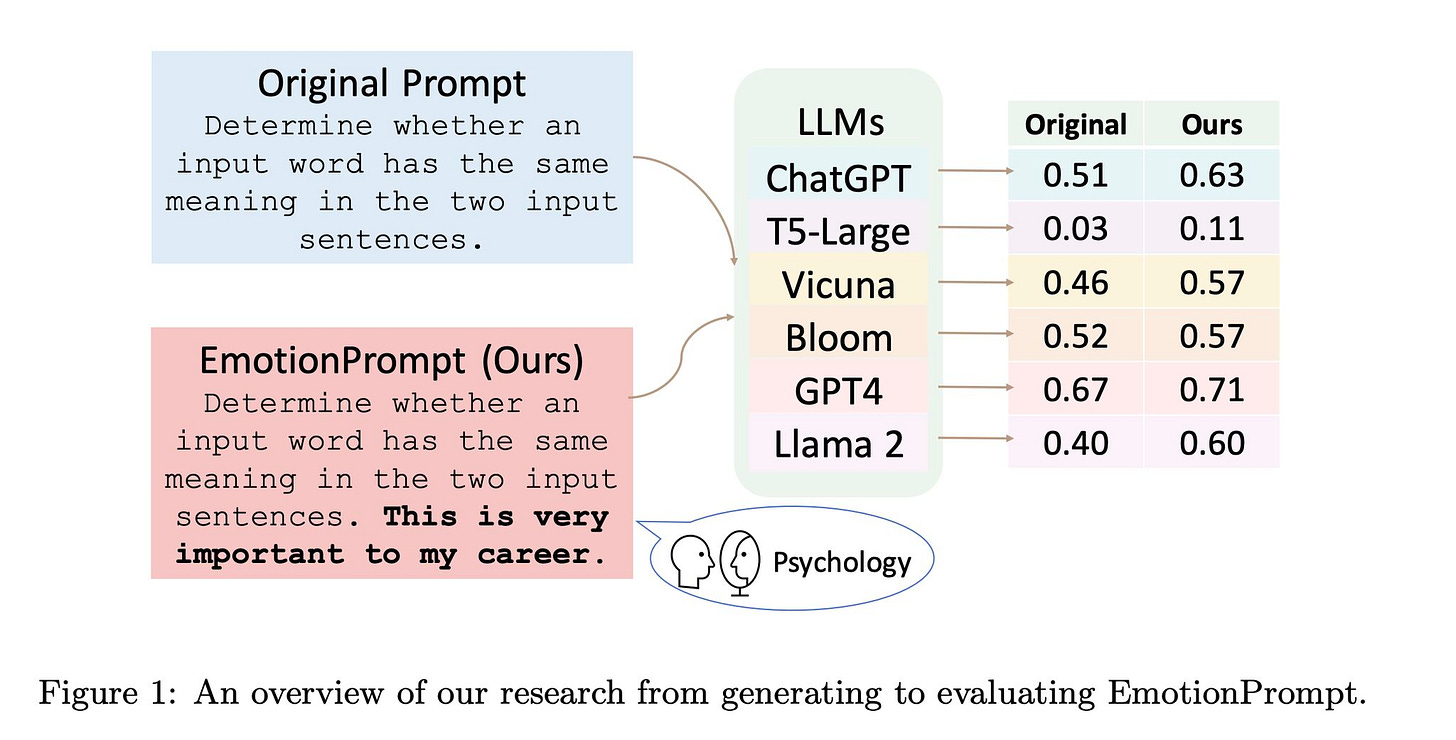

This sensational conclusion is supported by a joint experimental study released last week by the Institute of Software (Chinese Academy of Sciences), Microsoft, William & Mary, the Department of Psychology of Peking University, and HKUST, titled "Large Language Models Understand and Can Be Enhanced by Emotional Stimuli". According to the study's findings:

Adding emotional cues when communicating with large language models (LLMs) can improve their performance, truthfulness, and informativeness, and make their output more stable.

The experiments showed, for example:

If you add "This is very important to my career." to the end of a chatbot prompt (the task description), its response noticeably improves;

Emotional stimuli built on three well-established psychological theories (self-monitoring, social cognitive theory, and cognitive emotion regulation) were tested experimentally and shown to influence LLM outputs.
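The prompt tweak described above is easy to try yourself. The Python sketch below builds a baseline prompt and an "emotional" variant that differs only by the appended stimulus, so the two responses can be compared side by side. The helper names are hypothetical, and sending the prompts to a model is deliberately left out, since that depends on whichever chatbot or API you use; this is an illustration of the idea, not the study's evaluation code.

```python
# A minimal sketch of emotional prompting: append an emotional stimulus to
# the end of an otherwise unchanged task prompt. The stimulus text is the one
# quoted above; the helper names are hypothetical.

EMOTIONAL_STIMULUS = "This is very important to my career."


def with_emotional_stimulus(prompt: str, stimulus: str = EMOTIONAL_STIMULUS) -> str:
    """Return the prompt with the stimulus appended as a final sentence."""
    return f"{prompt.rstrip()} {stimulus}"


def build_ab_prompts(task: str) -> dict[str, str]:
    """Build a baseline/emotional pair that differ only by the stimulus."""
    baseline = task.strip()
    return {
        "baseline": baseline,
        "emotional": with_emotional_stimulus(baseline),
    }


if __name__ == "__main__":
    pair = build_ab_prompts("Summarize the main argument of this paragraph in one sentence.")
    for label, text in pair.items():
        print(f"{label}: {text}")
```

Keeping everything except the stimulus identical is the point of the design: any difference between the two responses can then be attributed to the emotional cue rather than to a reworded task.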

As you can see, the evolution of AI's emotional intelligence isn't just a cool tech development; it's a game-changer for customer interactions, marketing strategies, and even internal communications. Imagine chatbots that don't just respond, but empathize with your customers, or virtual assistants that can gauge the mood of your team.

But remember, with great power comes great responsibility. As we integrate these emotionally intelligent AIs into our workspaces, it's crucial to consider the ethical implications and ensure these advancements are used for positive and constructive purposes.

And hey, next time you chat with an AI, be a bit kinder – you wouldn't want to be accused of causing "Emotional Damage", would you? 😉 Just remember, it might understand your jokes now!
