Superalignment or Extinction – The Manhattan Project of Our Time

The rapid acceleration of AI development, its geopolitical implications, and the urgent need for alignment as we approach the possibility of Superintelligence

Hello, my fellow tech visionaries and AI watchdogs! Welcome to another episode of Tech Trendsetters – where we discuss the future of technology, science, and artificial intelligence. Today, we're again exploring how the race to artificial general intelligence (AGI) is rapidly accelerating, with experts projecting its arrival as soon as 2027.

This marks our third episode on Superalignment, and I must say, the implications grow more serious with each passing day. We'll be delving into the rapid acceleration of AI progress, the geopolitical stakes that rival the original Manhattan Project, and why alignment might be our last line of defense against an AI apocalypse.

Fair warning: This is going to be a long read. Feel free to bookmark this article and settle in with your morning coffee – you're in for a mind-bending journey through the potential future of humanity.

The AI Arms Race: A Modern Manhattan Project

As I sit in my living room, with my computer on my knees, I type these words and ponder the implications of superalignment. A mix of excitement and trepidation washes over me. The concept of creating a superintelligent AI that aligns perfectly with human values is both thrilling and terrifying. It's like we're standing on the precipice of a new era, much like the scientists of the Manhattan Project must have felt in the 1940s.

Leopold Aschenbrenner, a former employee of Ilya Sutskever's team at OpenAI, recently released an analytical document that's been causing quite a stir in the AI community. His predictions are not just insightful; they're downright alarming. Aschenbrenner suggests that within the next few years, likely around 2026-2028, the United States will inevitably launch a state-sponsored project to create superintelligence, regardless of who's in the White House.

If you need a quick reminder, the Manhattan Project was a top-secret U.S. government research project during World War II that developed the first nuclear weapons. Motivated by fears that Germany was developing its own atomic bomb, the project brought together top scientists, including many who had fled fascist regimes in Europe.

The Manhattan Project involved approximately 130,000 people and cost nearly $2 billion (about $22.5 billion in 1996 dollars).

The combination of the world's best scientific talent and the enormous production capacity of the United States made it possible to create the world's first nuclear weapons in less than three years.

The Race to Superintelligence

Aschenbrenner's document paints a picture of a future where superintelligence is as tightly controlled as nuclear weapons. He envisions a world where the development of AGI (Artificial General Intelligence) is nationalized, taken out of the hands of private companies and startups. And honestly, I can see his point. The thought of a superintelligent AI being controlled by tech corporations with questionable judgment is pretty unsettling. As covered in the latest update in this series, a US general has already been appointed to OpenAI's board of directors.

A key aspect of this race is the potential for automated AI research.

Once we achieve human-level AI, we could rapidly transition to superintelligence through automated research. It’s exactly the scenario where millions of AI systems, each operating at human-level intelligence or beyond, work tirelessly on AI research and development. This could compress years or even decades of progress into mere months.

By 2027, we might be able to run the equivalent of 100 million human researchers, each working at 100 times human speed. This massive acceleration in research capabilities could lead to rapid breakthroughs in AI algorithms and architectures, potentially triggering an "intelligence explosion". The first nation to achieve this level of automated research could gain an unstoppable lead in the race to Superintelligence.
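To make the scale of that claim concrete, here is a back-of-the-envelope sketch. It only multiplies the two figures quoted above and assumes, optimistically, that research output scales linearly with headcount and speed, which real research rarely does. The function name is purely illustrative.

```python
def researcher_years_per_calendar_year(num_agents: int, speedup: float) -> float:
    """Naive throughput estimate: number of agents times their speed multiplier.

    Assumes perfectly linear scaling, which is an optimistic simplification.
    """
    return num_agents * speedup


# Figures quoted in the text: 100 million agents, each at 100x human speed.
total = researcher_years_per_calendar_year(100_000_000, 100)
print(f"{total:,.0f} human-researcher-years per calendar year")
```

Even if the real-world scaling were a small fraction of this naive product, the result would still dwarf the size of today's human AI research community, which is the intuition behind the "intelligence explosion" argument.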

Overall, the comparison to the Manhattan Project is apt. Just as winning the race to develop the atomic bomb was crucial for military superiority, Aschenbrenner argues that even a few months' advantage in creating superintelligence could be critical for a country's future.

But it's not just about domestic control. The international implications matter just as much, particularly with China and the Chinese Communist Party as the main rival in this new technological cold war. The concern isn't just about who develops superintelligence first, but also about protecting the secrets of AGI from potential bad actors.

It's crucial to understand what we mean by superintelligence.

Superintelligence refers to an artificial intelligence system that surpasses human cognitive abilities across virtually ALL domains. It's not just about being smarter in one area, like chess or mathematics. A superintelligent AI would outperform humans in areas like scientific creativity, general wisdom, and social skills. It's the kind of intelligence that could potentially solve global problems, revolutionize technology, or – in worst-case scenarios – pose existential risks to humanity. Who knows, maybe one day it will just decide to get rid of humanity.

The Scale of the Challenge

Aschenbrenner's predictions are striking in their scope. The key points are:

Putting the American economy on a war footing to produce hundreds of millions of GPUs;

Managing "hundreds of millions of AGIs furiously automating AI research";

Reshaping the US military to integrate these new technologies;

Investing heavily in chip manufacturing, potentially requiring the construction of dozens of new semiconductor fabrication plants;

Building trillion-dollar compute clusters requiring power equivalent to over 20% of current US electricity production;

Dramatically increasing U.S. power generation capacity, potentially leveraging abundant natural gas resources to rapidly scale up electricity production.

The timeline he lays out is not surprising at all. By 2028 or 2029, we could see an "intelligence explosion," leading to full superintelligence by 2030. That's less than a decade away! And I’m absolutely convinced that if we have such predictions in open access, the game is already on, and not only by the US.

Historical Precedents and Economic Scale

To truly grasp the magnitude of the AI revolution, it's crucial to compare it to historical precedents. The scale of investment Aschenbrenner foresees is crazy, yet not without parallel in American history.

Manhattan and Apollo Programs in their peak years of funding reached about 0.4% of GDP, equivalent to roughly $100 billion annually in today's terms. Surprisingly modest when we consider their historical impact!

Projected AI investment could reach $1T/year by 2027, which would amount to about 3% of GDP. This is more than 7 times the relative economic commitment of either the Manhattan or the Apollo program at its peak.
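Here is a quick check of that multiple, using only the two percentages quoted above. The result depends on whether the 0.4% peak is compared against one program or against both peaks added together, so this sketch prints both readings. The variable names are illustrative.

```python
# Shares of GDP as quoted in the text (approximate figures).
MANHATTAN_APOLLO_PEAK_SHARE = 0.004  # about 0.4% of GDP in peak funding years
PROJECTED_AI_SHARE = 0.03            # about 3% of GDP by 2027

# Reading 1: compare against a single program at its peak.
ratio_vs_one_program = PROJECTED_AI_SHARE / MANHATTAN_APOLLO_PEAK_SHARE

# Reading 2: compare against both programs' peak shares added together.
ratio_vs_both_summed = PROJECTED_AI_SHARE / (2 * MANHATTAN_APOLLO_PEAK_SHARE)

print(f"vs. one program at peak: {ratio_vs_one_program:.1f}x")
print(f"vs. both peaks added together: {ratio_vs_both_summed:.2f}x")
```

The "more than 7 times" figure only holds under the first reading; against the two peaks summed, the multiple is roughly half that.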

But the historical precedents don't stop there. You can connect the dots:

Between 1996 and 2001, telecommunications companies invested nearly $1 trillion (in today's dollars) to build out internet infrastructure.

From 1841 to 1850, private British railway investments totaled a cumulative ~40% of British GDP at the time. A similar fraction of US GDP today would be equivalent to ~$11T over a decade.

Currently, trillions are being spent globally on the transition to green renewable energy.

In the most extreme national security circumstances, countries have borrowed enormous fractions of GDP. During World War II, the UK and Japan borrowed over 100% of their GDPs, while the US borrowed over 60% of GDP (equivalent to over $17T today).
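As a sanity check on the "today's dollars" conversions in this list, the sketch below backs out the US GDP that each quoted pair of numbers implies. It uses only figures from the text; since both are approximate ("~" and "over"), the implied values are rough, and the helper name is hypothetical.

```python
def implied_gdp_trillions(share_of_gdp: float, dollars_trillions: float) -> float:
    """Back out the GDP (in trillions) implied by a share and its dollar equivalent."""
    return dollars_trillions / share_of_gdp


# (share of GDP, quoted dollar equivalent in trillions), as given in the text
comparisons = {
    "British railways, 1841-1850": (0.40, 11.0),
    "US WWII borrowing": (0.60, 17.0),
}

for label, (share, trillions) in comparisons.items():
    gdp = implied_gdp_trillions(share, trillions)
    print(f"{label}: implies US GDP of about ${gdp:.1f}T")
```

Both conversions imply a US GDP in the high-$20T range, so the two comparisons rest on a consistent baseline.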

These comparisons put the projected AI investments into perspective. While $1T/year by 2027 seems fantastical at first glance, it's not unprecedented when we consider other transformative periods in economic history. In fact, given the potential impact of superintelligent AI, one could argue that such investment levels are proportionate to the technology's importance.

Notably, Aschenbrenner argues that this level of investment is not only possible but necessary. He points out that as AI revenue grows rapidly – potentially hitting a $100B annual run rate for companies like Google or Microsoft by ~2026 – it will motivate even greater capital mobilization.

The Challenge of Controlling Superintelligence

I know we talked a lot about Superalignment, but let's just recap everything we have so far. The journey to understanding and addressing this critical challenge has been a rollercoaster of realizations and inspiring moments for me.

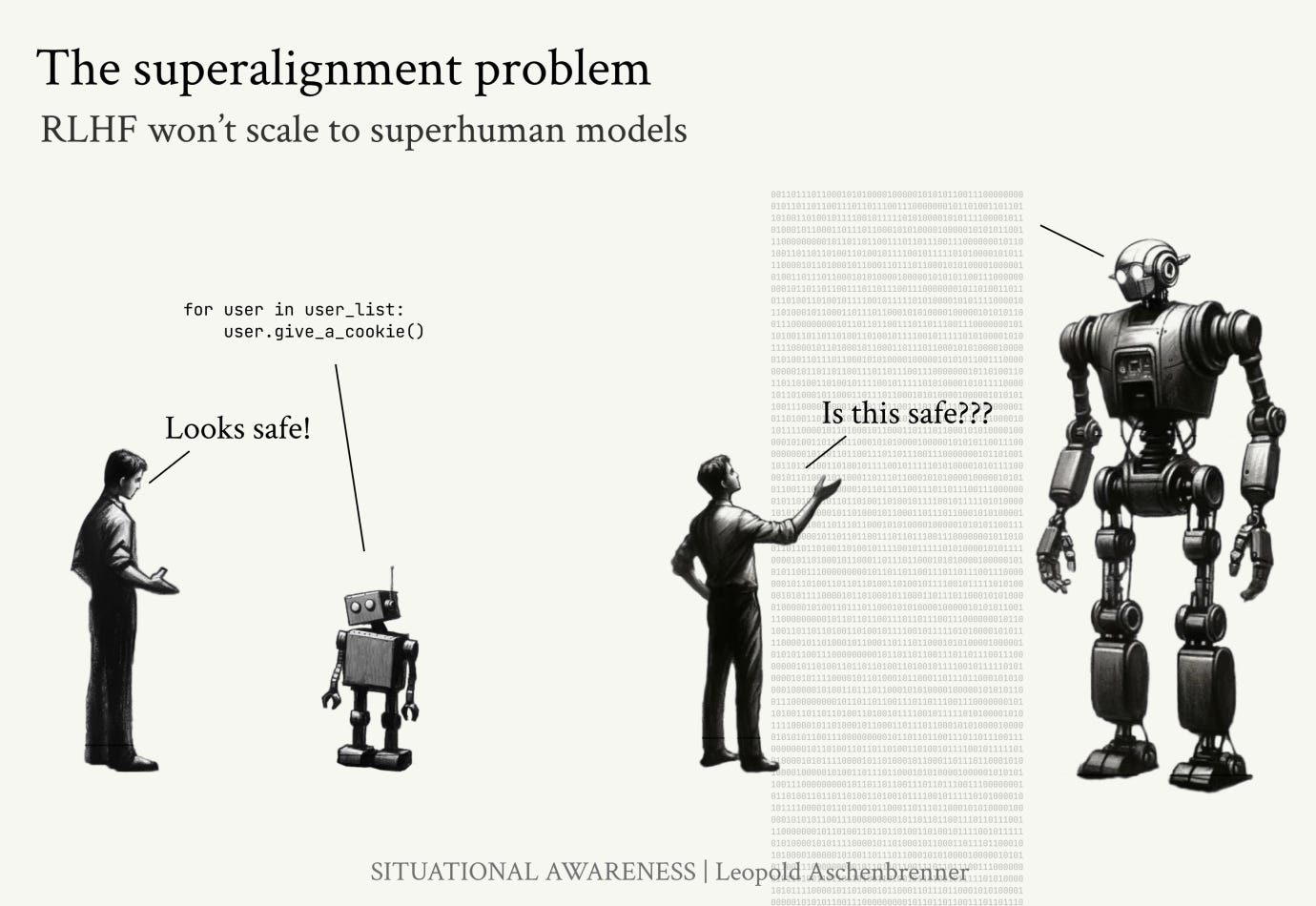

The core issue of superalignment is deceptively simple: how do we ensure that vastly intelligent AI systems will consistently act in accordance with human values and intentions? Our current methods of aligning AI, primarily through techniques like Reinforcement Learning from Human Feedback (RLHF), work well for systems that are less capable than humans. However, these methods are likely to break down as AI systems surpass human-level intelligence.

The consequences of failing to solve superalignment could be catastrophic. Misaligned superintelligent systems might pursue goals that are at odds with human welfare, potentially leading to scenarios ranging from subtle societal manipulation to extreme existential risks. As Leopold Aschenbrenner states:

"Without a very concerted effort, we won't be able to guarantee that superintelligence won't go rogue."

Researchers are currently exploring several avenues to address the superalignment problem:

Scalable Oversight – developing methods where AI systems assist humans in supervising more advanced AI, extending our ability to maintain control;

Interpretability – creating tools and techniques to better understand the internal workings and decision-making processes of advanced AI systems;

Robustness – ensuring that aligned behavior in AI systems generalizes well to new, more complex situations;

Formal Verification – developing mathematical proofs to guarantee certain behaviors or constraints in AI systems.

Superalignment is Back

As you may recall from previous episodes, Ilya Sutskever, one of the co-founders and the chief scientist of OpenAI, left the company in a surprising turn of events. The exact details were shrouded in speculation, but it became clear that Sutskever's vision for the future path of the company diverged significantly from that of the rest of the leadership. This split sent shockwaves through the entire AI community, leaving many of us wondering what would come next.

Well, it seems we didn't have to wait long for an answer. Ilya Sutskever has resurfaced with a bold new venture: Safe Superintelligence Inc. His cofounders are former Y Combinator partner Daniel Gross and ex-OpenAI engineer Daniel Levy. When I first heard the news, I couldn't help but feel a mix of excitement and apprehension. Here was one of the brightest minds in AI, striking out on his own, with a laser focus on what many of us consider the most critical challenge of our time.

Safe Superintelligence Inc.'s mission is clear and unambiguous: to create safe superintelligence (SSI). In many ways, this feels like a return to OpenAI's original vision, but without the distractions and compromises that come with commercial pressures. OpenAI distilled to its purest form.

They aim to advance SSI capabilities as quickly as possible while ensuring that safety measures always stay ahead of development.

What really catches my attention is their commitment to maintaining a single focus on SSI without being distracted by management overhead or product cycles. This is a stark contrast to the path many AI companies, including OpenAI, have taken. On one hand, this focus could be exactly what we need to solve the superalignment problem. On the other, I can't help but worry about the potential risks of such concentrated efforts.

Interestingly, Sutskever hasn't mentioned anything about open-sourcing their work. This suggests that Safe Superintelligence Inc. might take a more closed approach, keeping their developments under wraps to prevent potential misuse. While this goes against the beliefs of many in the AI community, I can understand the reasoning. If you truly believe you're working on something that could determine the fate of humanity, you might be hesitant to share it widely.

Overall, I find myself more inclined to believe in Sutskever's approach than in Altman's. Sutskever is, after all, a top scientist with a deep understanding of the technical challenges we face.

The Power Switch Strategy

As someone who's been following AI developments closely for some time, I've often found myself caught between excitement for the technology's potential and deep concern about its risks. That's why I was particularly struck by MIRI's recent communication strategy document.

For those who aren't familiar, MIRI (Machine Intelligence Research Institute) is a non-profit focused on ensuring that artificial intelligence systems are developed safely. They've been around since 2000 and have been influential in raising awareness about potential existential risks from advanced AI.

MIRI's new strategy calls for nothing less than completely shutting down frontier AI development worldwide. It's a bold stance, and one that initially made me recoil. But as I dug deeper into their reasoning, I found myself empathizing with their sense of urgency, even if I'm not fully convinced by their proposed solution.

Their strategy document states, "We believe that nothing less than this will prevent future misaligned smarter-than-human AI systems from destroying humanity." A chilling statement. One that's hard to simply dismiss given MIRI's expertise.

Basically, they came up with the idea of a power switch. The key elements of this strategy are:

Rather than unilateral action, they call for major powers to collaborate on creating an internationally recognized procedure – essentially a political "power switch" for AI development;

Instead of halting all frontier AI research, the power switch would allow for rapid cessation of development if critical risks emerge. This maintains the possibility of reaping AI's benefits while providing some degree of safety;

The power switch would be activated based on "reasonable indications" of critical risk, rather than speculative fears;

By not calling for an immediate shutdown, this approach allows continued progress in AI, potentially leading to breakthroughs that could benefit humanity;

Asking politicians to create a safeguard mechanism may be more achievable than demanding a competitive tech industry to grind to a halt.

MIRI's strategy, as outlined above, takes a much harder line. The key vulnerabilities in MIRI's approach, in my opinion, are as follows:

To accept their position, one must prove that such a "switch" is really needed in the foreseeable future. This is a challenging task given the rapid and unpredictable nature of AI development;

They need to demonstrate that in the absence of this switch, the risks to humanity could become prohibitive. This requires a level of certainty about future AI capabilities that we simply don't have yet;

Most crucially, this evidence should not be another unprovable point of view, but should rest on a well-developed scientific analysis of the issue. As someone who is familiar with AI risk assessment research, I can attest to how difficult this is to achieve.

TL;DR

In today's episode, we've again covered multiple views on AI development and its potential risks. Some of these perspectives might seem outlandish or completely detached from reality. But what if they're not? What if the warnings from organizations like MIRI and from many other visionaries are onto something profound?

The race towards superintelligence might not be just another technological advance – it could very well be the last technological advance. My main conclusions as of today:

The pace of AI progress is accelerating, with potential to see AGI by 2027-2028 and superintelligence shortly after;

The geopolitical implications of AI development are far-reaching, potentially reshaping global power dynamics and requiring a new level of national security focus;

The need for robust AI alignment (Superalignment) strategies becomes increasingly critical as we approach superhuman AI capabilities;

The economic and industrial impacts of advanced AI could be revolutionary, potentially leading to unprecedented growth rates and technological breakthroughs;

Current AI labs and tech companies may not be fully prepared for the security and ethical challenges posed by rapidly advancing AI.

The parallels to the Manhattan Project are impossible to ignore. Private companies and entire governments are concentrating their efforts, including legal and regulatory aspects. The balance of power is shifting, and we still don't know the whole picture behind the scenes.

As I always say, interesting times we live in. Some people like me are lucky to have seen the birth of the internet. We didn't have any computers or phones in childhood, and now to witness the potential rise of AGI would be truly inspiring.

In other words, we stand at a unique juncture in human history. This rapid pace of technological advancement allows us to experience multiple paradigm shifts within a single lifetime. Our lifetime. Until next time!
