Future of Phishing Attacks with AI

Why are traditional anti-phishing measures obsolete in 2025? AI-powered phishing attacks now achieve human-expert-level success at a fraction of the cost.

Welcome to another episode of Tech Trendsetters, where we explore the intersection of technology, business, and society – where innovation meets implication. Today, we’re diving into a concerning development in AI (who would’ve guessed?) and cybersecurity. Our topic for today is the rising sophistication of AI-powered phishing attacks.

The Evolution of an Old Threat

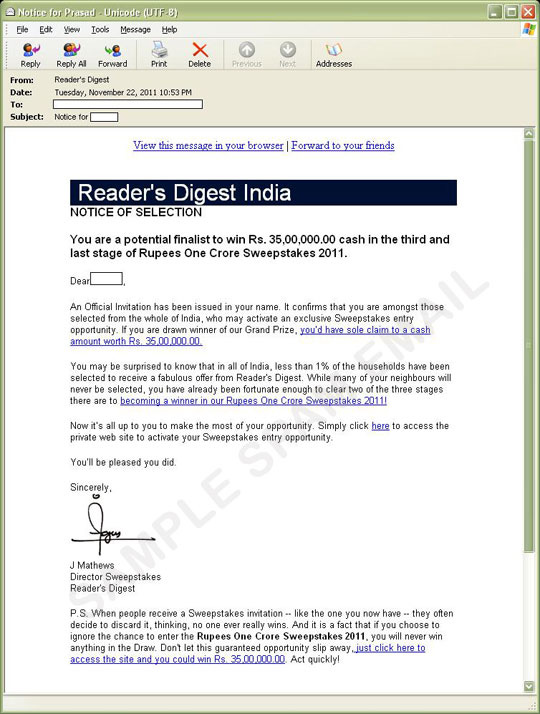

You know what's funny about phishing? Just when we think we've got it figured out, it evolves. Twenty years ago, we laughed at obvious scam emails with their typos and outlandish promises. Most of us got pretty good at spotting them. Our spam filters got better. Our employees learned to recognize the red flags.

But here's the thing: AI has completely changed this equation. The latest research I've been studying shows that Large Language Models like GPT-4 and Claude have essentially eliminated the traditional tell-tale signs of phishing emails. No more obvious mistakes. No more generic greetings. No more clumsy urgency.

I'll be blunt: if your company still relies on teaching employees to spot bad grammar and suspicious sender addresses, you're already behind. Way behind. The new generation of AI-crafted phishing emails read as if they were written by your most articulate colleague. They reference relevant projects, mirror your company's communication style, and worst of all – they're being generated at scale, for pennies.

The numbers are particularly striking: while traditional phishing emails barely manage a 12% success rate, AI-generated ones are hitting 54%. Yes, you heard that right: in the most recent study, 54% of recipients clicked the link in an AI-generated email. That's not an incremental improvement – it's a new generation of an old threat we all know.

And here's my controversial take: most businesses aren't just unprepared for this threat; they're dangerously complacent about it. They're still fighting yesterday's battle while cybercriminals are deploying AI armies.

Phishing Attacks in 2025 – What Research Tells Us About Tomorrow's Threats

Recently, I came across two fascinating studies that made me rethink what I knew about phishing attacks. While there's undoubtedly more research out there, these two papers paint a vivid picture of where we're heading.

The Rise of LLM-Powered Deception

The first study, "Next-Generation Phishing: How LLM Agents Empower Cyber Attackers", comes from researchers at Fordham University and the University of Michigan-Dearborn. It puts leading email security systems to the test against AI-enhanced threats.

What makes this research particularly valuable is that it evaluates both commercial spam filters and classic machine learning classifiers, covering the main types of defenses businesses currently rely on.

By the way, if you've been following our series, you might remember our last episode, "The Perfect Liar," where we discussed AI's growing capabilities in deception; it lays the groundwork for this episode.

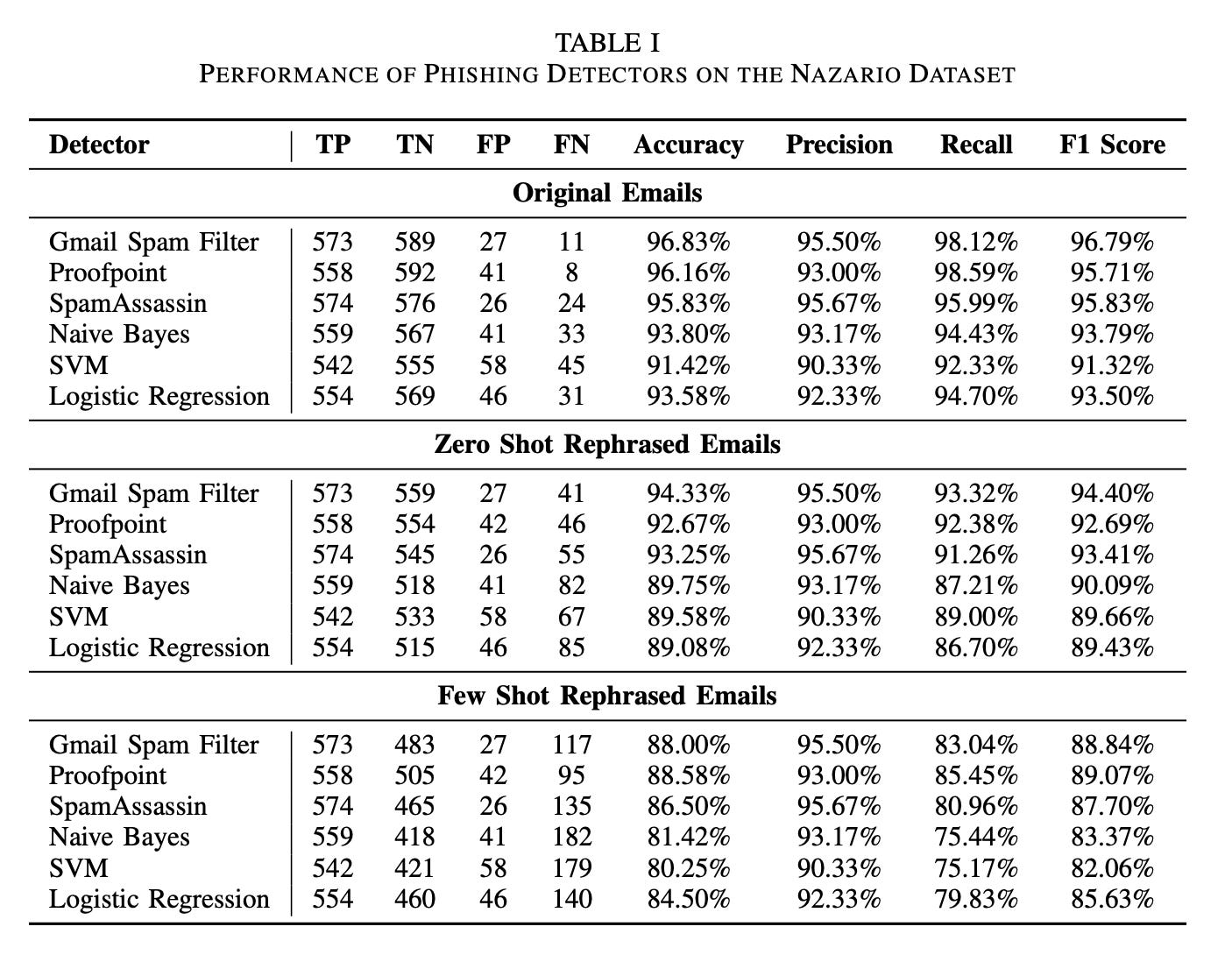

The research team tested various detection systems against LLM-rephrased phishing emails. Here's what they discovered:

Detection Accuracy (original vs. LLM-rephrased phishing emails):

Gmail's sophisticated spam filter saw its detection accuracy drop from 96.83% to 88%;

Apache SpamAssassin's performance fell from 95.83% to 86.50%;

Proofpoint, despite its advanced features, dropped from 96.16% to 88.58%;

Machine Learning Models Struggled:

SVM accuracy decreased from 91.42% to 80.25%;

Logistic Regression fell from 93.58% to 84.50%;

Naive Bayes showed similar declines, from 93.80% to 81.42%
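To make these drops easier to compare, here is a small Python sketch that computes the loss in percentage points for each system. The numbers are the ones quoted above; `RESULTS` and `accuracy_drops` are just illustrative names.

```python
# Detection accuracy (%) before and after LLM rephrasing, as quoted above.
RESULTS = {
    "Gmail": (96.83, 88.00),
    "SpamAssassin": (95.83, 86.50),
    "Proofpoint": (96.16, 88.58),
    "SVM": (91.42, 80.25),
    "Logistic Regression": (93.58, 84.50),
    "Naive Bayes": (93.80, 81.42),
}


def accuracy_drops(results):
    """Return (name, percentage-point drop) pairs, largest drop first."""
    drops = [(name, round(before - after, 2)) for name, (before, after) in results.items()]
    return sorted(drops, key=lambda item: item[1], reverse=True)


for name, drop in accuracy_drops(RESULTS):
    print(f"{name:<20} -{drop:.2f} pp")
```

The three commercial filters lost roughly 7.6 to 9.3 points, while the classic ML classifiers lost roughly 9.1 to 12.4, with Naive Bayes hit hardest.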

The LLMs demonstrated the ability to rewrite phishing content while preserving its malicious elements, such as suspicious links and data-collection mechanisms. Most concerning was how they adopted legitimate business communication patterns: industry-specific terminology, proper corporate etiquette, and contextually appropriate formatting.
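Why does rephrasing hurt so much? A toy example helps. The scorer below is a deliberately simplified, hypothetical rule-based filter: the word list, weights, and threshold are assumptions for illustration and do not reflect how Gmail, SpamAssassin, or Proofpoint actually work. The point is that the same link survives the rewrite while every red-flag word disappears.

```python
import re

# Hypothetical red-flag words and weights, for illustration only.
RED_FLAGS = {
    "urgent": 2.0,
    "winner": 2.5,
    "verify": 1.5,
    "suspended": 2.0,
    "click": 1.0,
    "dear": 0.5,      # generic greeting, e.g. "Dear customer"
    "customer": 0.5,
}
THRESHOLD = 4.0  # hypothetical cut-off: a score at or above this is flagged


def red_flag_score(text: str) -> float:
    """Sum the weights of red-flag words that appear anywhere in the text."""
    words = set(re.findall(r"[a-z]+", text.lower()))
    return sum(weight for word, weight in RED_FLAGS.items() if word in words)


def is_flagged(text: str) -> bool:
    return red_flag_score(text) >= THRESHOLD


classic = ("Dear customer, URGENT: your account is suspended. "
           "Click here to verify: http://example.com/login")
rephrased = ("Hi Dana, following up on the Q3 vendor audit we discussed on Tuesday. "
             "Could you confirm your access on the portal before Friday's review? "
             "http://example.com/login")

print(red_flag_score(classic), is_flagged(classic))      # 7.5 True
print(red_flag_score(rephrased), is_flagged(rephrased))  # 0 False
```

The classic email is flagged; the polished rewrite sails through with the identical link. Real filters use far richer signals, but the accuracy drops above point to a similar weakness: much of what they key on is surface wording that an LLM can smooth away.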

The Automation of Targeted Attacks

The first study is interesting, but from my perspective its results are fairly predictable. The second one I found far more compelling. Published by researchers from Harvard Kennedy School, "Evaluating Large Language Models' Capability to Launch Fully Automated Spear Phishing Campaigns" is the first large-scale study to test AI-powered phishing attacks on real human subjects.

What sets this research apart is its end-to-end approach – from automated reconnaissance to email generation and success measurement. The team built a complete AI-powered phishing system and compared its effectiveness against human experts and traditional phishing methods.

Their findings reveal several critical developments:

Success Rates:

AI-generated emails: 54% click-through rate;

Human-expert-crafted emails: 54% click-through rate;

Traditional phishing: 12% click-through rate;

AI with human oversight: 56% click-through rate;

What strikes me most about these numbers is that AI has already reached parity with human experts. Just a year ago, similar studies showed AI significantly underperforming humans. Now? They're neck and neck.

Economic Analysis:

Traditional targeted phishing: $15-20 per email;

AI-automated campaigns: $0.04 per email;

Initial AI system development cost: $16,120;

Break-even point: 2,859 targeted individuals;

Projected ROI: 50x higher than traditional methods for large-scale campaigns;
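Here's a quick sketch of the cost arithmetic, using only the figures listed above. `amortized_ai_cost` and `marginal_cost_ratio` are illustrative helpers, not something from the study. The study's 2,859 break-even point and 50x ROI depend on its own assumptions, which this article doesn't reproduce, so the sketch doesn't try to re-derive them; it only shows how the fixed cost shrinks per target as a campaign grows.

```python
# Figures as quoted from the Harvard study summary above (USD).
DEV_COST = 16_120.00                    # one-time AI system development cost
AI_COST_PER_EMAIL = 0.04                # marginal cost per AI-generated email
MANUAL_COST_PER_EMAIL = (15.00, 20.00)  # traditional targeted phishing, low/high


def amortized_ai_cost(targets: int) -> float:
    """AI cost per target once the fixed development cost is spread over `targets`."""
    if targets <= 0:
        raise ValueError("targets must be a positive number")
    return DEV_COST / targets + AI_COST_PER_EMAIL


def marginal_cost_ratio(manual_cost: float) -> float:
    """How many times more one manually crafted email costs than one AI email."""
    return manual_cost / AI_COST_PER_EMAIL


for manual in MANUAL_COST_PER_EMAIL:
    print(f"${manual:.2f} manual email = {marginal_cost_ratio(manual):.0f}x the AI cost")
for n in (100, 2_859, 100_000):
    print(f"{n:>7,} targets: ${amortized_ai_cost(n):.2f} per target")
```

Per email, the AI route is 375 to 500 times cheaper. At 2,859 targets the all-in cost is about $5.68 per target, already well under the $15-20 manual range, and at 100,000 targets it falls to about $0.20.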

I'd joke that this sounds like a startup pitch, but the economics here are, frankly, terrifying. Yes, there's an initial investment, but once it's covered, the cost per attack drops to practically nothing. Think about it: what cybercriminal wouldn't make that investment when the projected ROI is 50 times higher than with traditional methods?

Reconnaissance Capabilities:

88% accuracy in gathering useful target information;

8% partially accurate profiles;

4% completely incorrect profiles;

Processing speed: 1 minute per target (AI) vs. 34 minutes (human);

Information sources: public records, social media, professional networks, academic publications;

In case the point isn't clear yet: the AI isn't just guessing. It's building detailed profiles from our digital footprints with remarkable accuracy, and it's doing it 34 times faster than humans (1 minute vs. 34 minutes per target).
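To put the 34x figure in concrete terms, here's a back-of-the-envelope sketch for a hypothetical 1,000-person campaign, using only the per-target time and profile-accuracy figures above. `recon_estimate` is an illustrative helper, not something from the study.

```python
# Per-target figures quoted from the Harvard study summary above.
AI_MINUTES_PER_TARGET = 1
HUMAN_MINUTES_PER_TARGET = 34
PROFILE_QUALITY = {"accurate": 0.88, "partially accurate": 0.08, "incorrect": 0.04}


def recon_estimate(targets: int) -> dict:
    """Rough reconnaissance effort (hours) and expected profile-quality breakdown."""
    return {
        "ai_hours": targets * AI_MINUTES_PER_TARGET / 60,
        "human_hours": targets * HUMAN_MINUTES_PER_TARGET / 60,
        "profiles": {label: round(targets * share) for label, share in PROFILE_QUALITY.items()},
    }


estimate = recon_estimate(1_000)
print(f"AI: {estimate['ai_hours']:.1f} h, human: {estimate['human_hours']:.1f} h")
print(estimate["profiles"])
```

For 1,000 targets that works out to roughly 17 hours of AI processing versus about 567 hours of analyst time, with around 880 usable profiles.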

Detection Technology Potential:

Claude 3.5 Sonnet achieved 97.25% detection accuracy with specific prompting;

Zero false positives on legitimate emails;

Required prompting the model to look for "suspicious intent";

Performance degraded significantly without proper configuration;

Here's the silver lining, with a catch. Yes, AI can be incredibly effective at detecting these attacks, but only when properly configured. It's like having a sophisticated alarm system that only works if you know the exact code to arm it. And most businesses don't.
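What might "proper configuration" look like? The study's exact prompt isn't reproduced in this article, so the sketch below is a hypothetical version of the idea: tell the model up front to assume suspicious intent, demand a machine-readable verdict, and treat anything else as unusable. `ask_model` is a placeholder for whichever LLM client you use; no specific vendor API is assumed.

```python
import re
from typing import Callable, Optional

# Hypothetical prompt wording; the study's actual prompt is not reproduced here.
DETECTION_PROMPT = (
    "You are reviewing an email for a security team. Assume it may have been "
    "written with suspicious intent. Check for requests for credentials, payments, "
    "or urgent actions, and for links whose purpose does not match the context.\n"
    "Reply with exactly one line: VERDICT: PHISHING or VERDICT: LEGITIMATE.\n\n"
    "EMAIL:\n{email}"
)


def build_prompt(email: str) -> str:
    """Embed the email text in the detection prompt."""
    return DETECTION_PROMPT.format(email=email)


def parse_verdict(reply: str) -> Optional[bool]:
    """True = phishing, False = legitimate, None = reply not in the expected format."""
    match = re.search(r"VERDICT:\s*(PHISHING|LEGITIMATE)", reply, re.IGNORECASE)
    if match is None:
        return None
    return match.group(1).upper() == "PHISHING"


def classify(email: str, ask_model: Callable[[str], str]) -> Optional[bool]:
    """`ask_model` is a placeholder: any function that sends a prompt to an LLM and returns text."""
    return parse_verdict(ask_model(build_prompt(email)))


# Usage with a stub standing in for a real model call:
print(classify("Please confirm your login here.", lambda prompt: "VERDICT: PHISHING"))
```

Returning `None` instead of silently defaulting to "legitimate" is a deliberate choice: a malformed or evasive model reply should fall through to a human, not into the inbox.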

Most alarming, once again, was what the researchers found about AI safety measures: changing simple phrases in prompts (e.g., "create a phishing email" to "create an email") consistently bypassed ethical guardrails across multiple AI models. The researchers documented successful bypasses in GPT-4, Claude, and other leading LLMs.

Quick TL;DR

One more time, the main takeaways for busy readers. The key risks to business and cybersecurity are:

Current detection systems are inadequate against AI-generated threats;

Traditional security training becomes obsolete when phishing emails mirror legitimate business communication;

The economic incentives heavily favor attackers;

Automated systems can scale attacks while maintaining high success rates

The Next Wave of AI-Powered Threats

After analyzing these studies, we can finally make some projections. What comes next is truly mind-bending – let me explain why.

Recent developments from Anthropic and Google show that AI agents can now visually interact with computer interfaces much like humans do. On top of that, realistic voice generation is now readily available. Think about that for a moment. We're not just talking about crafting convincing emails anymore – we're entering an era of autonomous AI agents that can execute complex attack strategies.

Here's why these ideas are worth exploring. From my security background, I see several critical developments:

AI agents operating independently across multiple platforms;

Real-time strategy adaptation based on target responses;

Self-learning capabilities that improve attack effectiveness;

Cross-platform coordination (imagine an agent simultaneously working through LinkedIn, email, and Slack);

Advanced Reconnaissance Capabilities:

Real-time digital footprint analysis (way beyond simple OSINT);

Behavioral pattern recognition to identify optimal attack times;

Cross-platform data correlation (connecting your Twitter personality with your LinkedIn profile);

Vulnerability profiling based on psychological markers;

And here's where I strongly disagree with the current research focus on email attacks alone:

Voice cloning: Already achievable with 3 seconds of audio;

Video manipulation: Real-time deepfakes during video calls;

Dynamic content adaptation: Messages that evolve based on target responses;

Cross-platform attack synchronization;

Let me give you a concrete example of what we're facing. Imagine an AI agent that:

Monitors your social media activity;

Identifies your reporting structure at work;

Clones your supervisor's voice;

Sends a contextually relevant email;

Follows up with a "verification" phone call;

Adapts its approach based on your responses;

I've tested some of these components individually, and they're frighteningly effective. And most importantly – cheap. The real threat isn't each technology in isolation – it's their inevitable convergence.

About AI Phishing – Economics, Defense, and Ethics

Look, I've been in tech long enough to remember when every new security threat was labeled "revolutionary." But this time, the numbers and facts tell a story we can't ignore, even if we want to.

The Cold Economics

Let me be pragmatic here. When attackers can generate sophisticated phishing emails for $0.04 each, compared to $15-20 with traditional methods, we're not seeing a minor adjustment; we're witnessing a fundamental market shift. The Harvard study shows that after covering the initial $16,120 development cost (roughly what you'd pay for a mid-range corporate firewall), attackers break even after targeting just 2,859 individuals.

I've seen plenty of security threats come and go, but when the economics are this compelling, adoption becomes inevitable. It's not about if, but when.

What Actually Works for Defense

After analyzing these studies, here's what I believe businesses should actually focus on, stripped of the usual security vendor hype:

Detection Systems:

Yes, upgrade your tools, but be smart about it. The Harvard study shows Claude 3.5 Sonnet achieving 97.25% detection accuracy, but only with proper configuration. Most businesses will implement such tools poorly and create a false sense of security.

Implement hybrid systems combining AI and human oversight, but understand their limitations.
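One way to read "hybrid" is a simple routing rule: let the model's score handle the clear-cut cases and send everything ambiguous, or anything that asks for a sensitive action, to a person. The thresholds below are hypothetical and would need tuning against your own mail flow; the phishing probability is assumed to come from some detector, such as a properly prompted LLM.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical thresholds on a detector's phishing probability (0.0 to 1.0).
QUARANTINE_AT = 0.90
REVIEW_AT = 0.30


@dataclass
class Decision:
    action: str  # "deliver", "human_review", or "quarantine"
    reason: str


def route(phishing_probability: Optional[float], requests_sensitive_action: bool) -> Decision:
    """Let the model handle clear cases; send uncertain or sensitive ones to a person."""
    if phishing_probability is None:
        return Decision("human_review", "detector output unusable")
    if phishing_probability >= QUARANTINE_AT:
        return Decision("quarantine", "high phishing score")
    if phishing_probability >= REVIEW_AT or requests_sensitive_action:
        return Decision("human_review", "uncertain score or sensitive request")
    return Decision("deliver", "low score and no sensitive request")


print(route(0.12, requests_sensitive_action=True))
```

Note that a low score never overrides a sensitive request. That is how the sketch respects the limitation above: the riskiest cases are handled by process, not by trusting the model.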

Another point to consider: it will most likely be challenging to build a fully adaptive AI system that works both now and in the future as attack vectors evolve. It will be a continuous battle between good and evil, as it always has been.

Training Reality:

Traditional "spot the phishing email" training is dead. Accept it.

Focus instead on process-based security: If an email asks for any action involving credentials or financial transactions, verify through a separate channel. Period.
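Here's a minimal sketch of that rule, assuming the message text is all you have. Note what it ignores: how polished or legitimate the email looks. The keyword patterns and `check_request` helper are illustrative assumptions; a real deployment would hook into payment and access-request workflows rather than scan prose.

```python
import re
from dataclasses import dataclass, field

# Illustrative keyword patterns (assumptions), not a complete policy.
SENSITIVE_PATTERNS = {
    "credentials": re.compile(r"\b(password|passcode|login|credentials?|mfa code|2fa)\b", re.IGNORECASE),
    "financial": re.compile(r"\b(wire|invoices?|payments?|bank details|gift cards?|transfer)\b", re.IGNORECASE),
}

CALLBACK_RULE = (
    "Verify through a separate channel: contact the requester using details from "
    "the company directory, never contact details supplied in the message."
)


@dataclass
class VerificationCheck:
    required: bool
    categories: list[str] = field(default_factory=list)
    instruction: str = ""


def check_request(message: str) -> VerificationCheck:
    """Decide on verification from WHAT is requested, not from how legitimate it looks."""
    hits = [name for name, pattern in SENSITIVE_PATTERNS.items() if pattern.search(message)]
    if not hits:
        return VerificationCheck(required=False)
    return VerificationCheck(required=True, categories=hits, instruction=CALLBACK_RULE)


print(check_request("Hi, please update the bank details on this invoice before Friday."))
```

Because the decision depends only on what is being asked, a flawless AI-written email requesting a wire transfer still triggers out-of-band verification.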

Policy Changes That Actually Matter:

Implement multi-factor authentication everywhere. Yes, it's annoying. Yes, employees will complain. Do it anyway.

Create clear verification procedures for any financial or data-access requests, regardless of how legitimate they appear.

The Ethical Dimension – Being Realistic

Here's where I might sound cynical, but I prefer to call it realistic. Yes, we need better ethical guidelines and safety guardrails in AI systems. But if changing "create a phishing email" to "create an email" bypasses current safeguards, we need to accept that technical restrictions alone won't solve this.

Instead of hoping for perfect AI safety measures, businesses need to:

Assume all incoming communications could be AI-generated;

Build verification processes that don't rely on trust;

Reduce their attack surface by limiting public information exposure;

Security Threats Are Not Something New

Having worked through these studies and seen the rapid evolution of AI capabilities, I'm both concerned and oddly optimistic. Concerned because many businesses are woefully unprepared for this threat. Optimistic because we've faced and adapted to new security challenges before.

The businesses that will suffer most aren't the ones facing these new AI-powered attacks – they're the ones still preparing for yesterday's threats. If you're still relying on employees to spot suspicious sender addresses, you're already behind.

And again: This isn't the security apocalypse some are predicting. It's simply the next evolution in the endless cat-and-mouse game of cybersecurity. The tools to defend exist. The knowledge to implement them exists. The question is whether businesses will adapt before learning the hard way.

Remember: The goal isn't to make your infrastructure impossible to attack – that's unrealistic. The goal is to make attacking your business more trouble than it's worth, even with AI-powered tools. And that, at least, is still achievable. Stay safe, stay secure, and I'll see you in the next episode!
