Software 3.0: How English Became the New Programming Language

LLMs are the new operating system – from partial autonomy to agent-ready infrastructure, your guide to surviving Software 3.0 and why every software company must rebuild for this reality

Software is changing. Again. And this time, it's a fundamental shift we haven't seen in 70 years. Andrej Karpathy recently delivered a cool talk at Y Combinator's AI Startup School, and his perspective aligns with what I've been saying all along: LLMs aren't just another tool – they represent an entirely new computing paradigm. We now program these systems in English. Plain, natural, everyday English.

The Great Software Paradigm Shift

Here's what most people are missing: this isn't just another tech upgrade. This is the computing equivalent of moving from horses to cars.

Most of the people I talk to (and they're really smart) still think LLMs are glorified chatbots. They're catastrophically wrong. While they're debating whether to add a chat feature to their app, entire industries are being rebuilt from the ground up.

Today, I'm going to break down Andrej Karpathy's view of LLMs (he is one of OpenAI's co-founders), tell you exactly why it matters for your business or organization, and explain why I think this is a real paradigm shift: the kind of shift that happens maybe twice in seventy years.

Three Eras of Software

So let's think through what's happening here.

Software 1.0 is everything you know about programming. Humans write explicit instructions in specialized languages. C++, Python, JavaScript – the works. You specify exactly what the computer should do, step by step. This built our entire digital world over the past seventy years.

Software 2.0 emerged with neural networks. Instead of writing code directly, you curate datasets and run optimizers to tune model weights. The "program" becomes the weights of the neural network. I saw this firsthand in various AI projects – it's powerful but requires deep technical expertise.

Now we have Software 3.0, and it's genuinely revolutionary. Your prompts are programs. The programming language is English. Think about the implications of that statement. The barrier to entry for software creation just collapsed to almost zero for anyone who can communicate in natural language.

Take sentiment analysis as an example. You can write hundreds of lines of Python code, train a neural network on labeled data, or simply prompt an LLM with "Analyze the sentiment of this text." Same task, radically different approaches.
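Here is a minimal Python sketch of that sentiment task written in all three styles. The word lists, the tiny training set, and every function name are illustrative assumptions, not a production approach. The 1.0 version uses hand-written rules. The 2.0 version is a toy naive Bayes classifier whose "program" is a set of learned word counts. The 3.0 version is only the prompt you would send to whichever LLM API you use; no real API call is made here.

```python
import math
import re
from collections import Counter

def tokenize(text: str) -> list[str]:
    return re.findall(r"[a-z']+", text.lower())

# Software 1.0: a human writes the rules explicitly.
POSITIVE = {"love", "great", "excellent", "happy"}
NEGATIVE = {"hate", "awful", "terrible", "sad"}

def sentiment_v1(text: str) -> str:
    words = tokenize(text)
    score = sum(w in POSITIVE for w in words) - sum(w in NEGATIVE for w in words)
    if score > 0:
        return "positive"
    return "negative" if score < 0 else "neutral"

# Software 2.0: the "program" is learned from labeled data
# (here a toy naive Bayes model with add-one smoothing and no class priors).
def train_v2(examples: list[tuple[str, str]]):
    counts: dict[str, Counter] = {}
    for text, label in examples:
        counts.setdefault(label, Counter()).update(tokenize(text))
    vocab = set().union(*counts.values())

    def predict(text: str) -> str:
        def log_likelihood(label: str) -> float:
            c = counts[label]
            total = sum(c.values())
            return sum(math.log((c[w] + 1) / (total + len(vocab))) for w in tokenize(text))
        return max(counts, key=log_likelihood)

    return predict

# Software 3.0: the program is an English prompt for an LLM.
def sentiment_v3_prompt(text: str) -> str:
    return ("Analyze the sentiment of this text. Answer with one word: "
            "positive, negative, or neutral.\n\n"
            f"Text: {text}")
```

Note that the third function contains no logic at all. It just returns a string, and that is the point: the prompt itself is the program.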

I've experienced this shift firsthand as well. Without knowing the game engine's framework, I built a simple, fully working game in a couple of days using only English prompts. Admittedly, being a software engineer made it easier for me. Still, the traditional gatekeepers of software development (years of learning syntax and frameworks, and of debugging arcane errors) suddenly matter less than your ability to communicate clearly what you want.

LLM Psychology: Working with People Spirits

Before you can build effective products with LLMs, you need to understand what these systems actually are. LLMs aren't sophisticated databases. They're not reasoning engines. They're what Karpathy brilliantly calls "people spirits": stochastic simulations of human behavior, trained on an enormous corpus of human-written text.

This means they have distinctly human-like psychology, complete with superhuman capabilities and very human limitations. They can remember obscure facts, perform complex reasoning, and generate creative solutions. But they also hallucinate, make bizarre logical errors, and suffer from what's essentially digital amnesia – unable to learn or remember across sessions.

I've been working with these systems long enough to recognize the pattern. Working with them is like working with a brilliant colleague who has near-perfect recall but occasionally insists that obvious facts are wrong. They'll solve complex problems elegantly, then fail at basic arithmetic.

This jagged intelligence profile has massive implications for product development. Too many companies are building AI products as if LLMs were infallible reasoning machines, and in doing so they're setting themselves up for catastrophic failures.

The successful companies (the ones building sustainable AI products) understand that LLMs are powerful but fallible tools that require human oversight. They're designing systems that amplify human capabilities rather than replacing human judgment.
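One concrete form of oversight is to never accept a model's arithmetic on faith. The sketch below assumes the model has returned a claimed numeric answer for an expression. A small deterministic evaluator (Software 1.0 acting as a checker) accepts the claim only if it matches and otherwise routes the result to a human. The function names and the escalation message are hypothetical.

```python
import ast
import math
import operator

# Only these operators are allowed; anything else is rejected.
_OPS = {ast.Add: operator.add, ast.Sub: operator.sub,
        ast.Mult: operator.mul, ast.Div: operator.truediv}

def evaluate(expr: str) -> float:
    """Safely evaluate + - * / arithmetic without calling eval()."""
    def _eval(node: ast.AST) -> float:
        if isinstance(node, ast.Constant) and type(node.value) in (int, float):
            return node.value
        if isinstance(node, ast.BinOp) and type(node.op) in _OPS:
            return _OPS[type(node.op)](_eval(node.left), _eval(node.right))
        if isinstance(node, ast.UnaryOp) and isinstance(node.op, ast.USub):
            return -_eval(node.operand)
        raise ValueError(f"unsupported expression: {expr!r}")
    return _eval(ast.parse(expr, mode="eval").body)

def review_llm_answer(expr: str, claimed: float) -> str:
    """Accept the model's answer only if it matches a deterministic check."""
    actual = evaluate(expr)
    if math.isclose(actual, claimed, rel_tol=1e-9, abs_tol=1e-9):
        return "accepted"
    return f"needs human review: model said {claimed}, check gives {actual}"
```

The design choice is deliberate: the LLM does the flexible part (turning a question into an expression and an answer), and plain code does the part LLMs are unreliable at.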

LLMs as the New Operating System

In Karpathy's analogy, the LLM is the CPU: the core compute unit of this new paradigm. The context window functions as RAM, holding the working memory for the problem at hand. Just like in early computing, high costs force centralization in the cloud, with all of us acting as thin clients that share resources through time-sharing.
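The "context window as RAM" analogy has a direct engineering consequence: working memory is finite, so something has to decide what stays in it. Here is a minimal sketch, assuming a fixed token budget and a crude one-token-per-word estimate (real tokenizers count differently). It keeps the system prompt plus as many recent messages as fit. Anything older is simply gone, which is also why a model forgets earlier conversation unless you send it again.

```python
from dataclasses import dataclass

@dataclass
class Message:
    role: str
    content: str

def approx_tokens(text: str) -> int:
    # Crude assumption: one token per whitespace-separated word.
    return len(text.split())

def fit_context(system: Message, history: list[Message], budget: int) -> list[Message]:
    """Keep the system prompt plus the newest messages that fit within `budget` tokens."""
    used = approx_tokens(system.content)
    kept: list[Message] = []
    for msg in reversed(history):  # walk from newest to oldest
        cost = approx_tokens(msg.content)
        if used + cost > budget:
            break  # everything older is evicted from "RAM"
        kept.append(msg)
        used += cost
    return [system] + kept[::-1]  # restore chronological order
```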

The parallels to the 1960s mainframe era are striking. We're in the pre-personal-computer phase of this new computing paradigm. Right now, LLM compute is too expensive for personal ownership, so we access it through APIs. But this will change, just like it did with traditional computers.

What's fascinating is the ecosystem developing around this. We have closed-source providers (OpenAI, Anthropic) competing with open-source alternatives (Llama ecosystem). It's Windows vs. Linux all over again, but for intelligent systems.

When major LLMs go down (which actually happened recently), it creates an "intelligence brownout." Businesses that have integrated these systems suddenly lose capability. We're becoming dependent on this infrastructure faster than most people realize.
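A common mitigation for an intelligence brownout is to fail over to another model. The sketch below is provider-agnostic: each provider is just a name plus a callable that takes a prompt and returns text. Any real SDK calls you plug in are up to you, and the stub providers used to exercise it are hypothetical. It also reports which provider answered, so you can track how often you are running in a degraded state.

```python
from typing import Callable, Sequence

class AllProvidersDown(Exception):
    """Raised when every configured model provider failed."""

def complete_with_fallback(
    prompt: str, providers: Sequence[tuple[str, Callable[[str], str]]]
) -> tuple[str, str]:
    """Try providers in order; return (provider_name, completion) from the first that answers."""
    errors = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except Exception as exc:  # timeouts, 5xx responses, rate limits, ...
            errors.append(f"{name}: {exc}")
    raise AllProvidersDown("; ".join(errors))
```

Falling back does not make the brownout disappear. A secondary model may be weaker or behave differently, so it is worth logging every fallback rather than hiding it.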

Partial Autonomy: The Only Viable Strategy

Here's another area where most AI companies are getting it spectacularly wrong: they're chasing full autonomy. They want to build agents that can operate independently, make complex decisions, and execute tasks without human intervention.

This is a fantasy. And it's a dangerous one.

The autonomous vehicle industry has been promising full self-driving for over a decade. Billions of dollars invested, thousands of engineers working on the problem, and we're still nowhere close to true autonomy. Even today's "driverless" cars require extensive human monitoring and intervention.

The pattern is clear: complex, real-world automation is really, really hard. Anyone telling you that 2025 is "the year of agents" hasn't learned the lessons of the past decade.

The companies that are succeeding with AI understand this. They're building what Karpathy calls "partial autonomy" products – systems with an autonomy slider that lets users control how much delegation they're comfortable with.

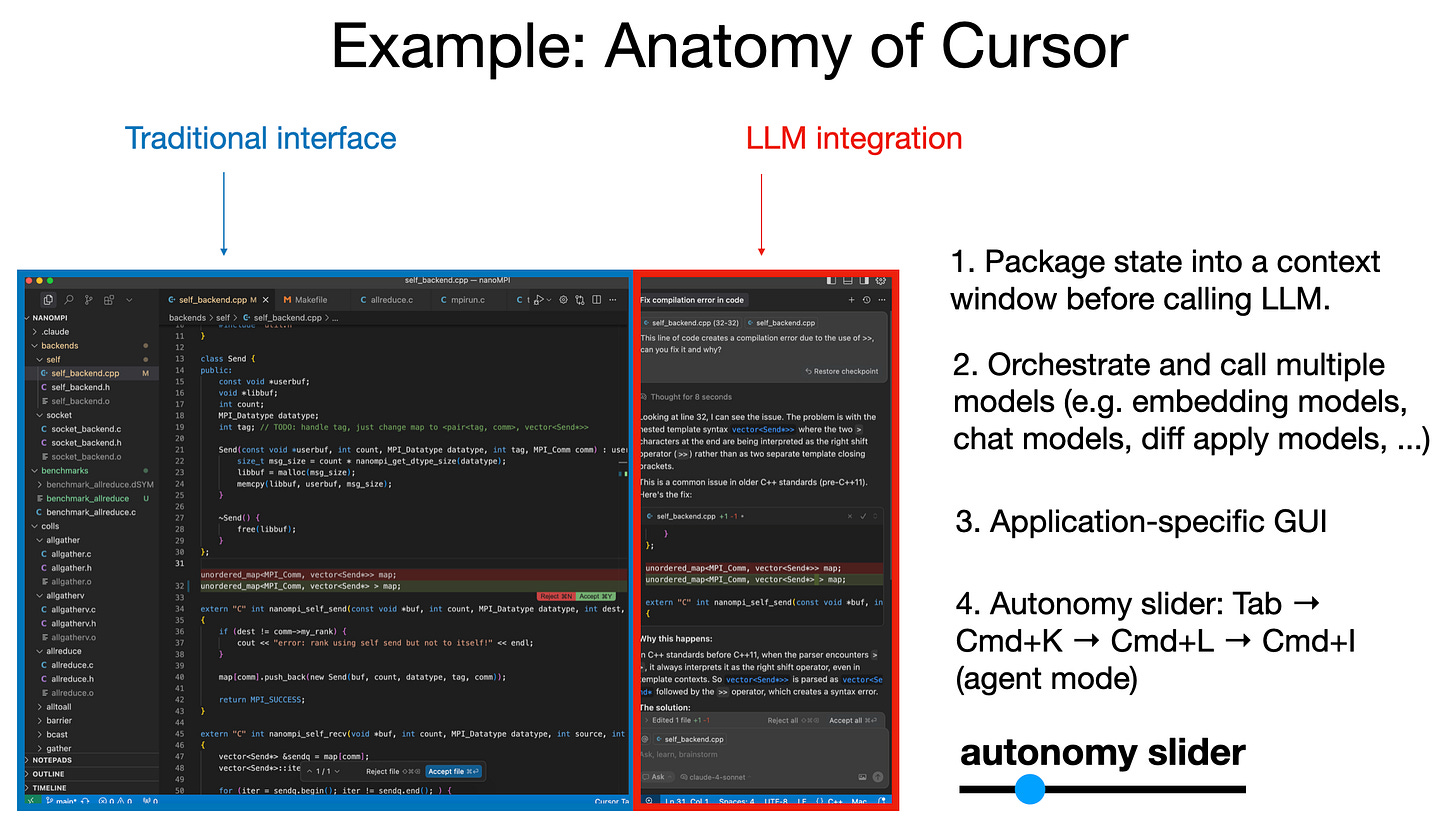

Cursor is the perfect example. You can use simple tab completion (minimal AI involvement), select code and request specific changes (moderate autonomy), or let the AI rewrite entire files (maximum autonomy). The user stays in control of the trade-off between speed and risk.
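The autonomy slider can be modelled as an ordered setting that gates which actions the AI may take on its own. This is a hypothetical sketch loosely based on the Cursor example. It does not reflect Cursor's actual implementation, and the level and action names are made up. In a real product, even "apply" would still show the change for review.

```python
from enum import IntEnum

class Autonomy(IntEnum):
    """Hypothetical slider positions, loosely modelled on the Cursor example."""
    TAB_COMPLETE = 1    # AI suggests the next few characters or lines
    EDIT_SELECTION = 2  # AI may change only code the user selected
    REWRITE_FILE = 3    # AI may rewrite an entire file

# Minimum slider position each kind of AI action needs.
REQUIRED_LEVEL = {
    "complete_line": Autonomy.TAB_COMPLETE,
    "edit_selection": Autonomy.EDIT_SELECTION,
    "rewrite_file": Autonomy.REWRITE_FILE,
}

def decide(action: str, slider: Autonomy) -> str:
    """Apply the action directly if the slider allows it; otherwise ask the user first."""
    return "apply" if slider >= REQUIRED_LEVEL[action] else "ask_user"
```

Because `IntEnum` values are ordered, moving the slider up automatically unlocks every action below it. That matches the idea of the user gradually delegating more.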

This approach works because it acknowledges a fundamental truth: humans are still the bottleneck in the verification process. I don't care if an AI can generate 10,000 lines of code instantly – I still need to review it, understand it, and take responsibility for it. The key is making that verification process as efficient as possible.
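A cheap way to speed up verification is to show reviewers only what changed. Here is a minimal sketch using Python's standard `difflib.unified_diff` to render an AI-proposed edit as a familiar unified diff. The file name and the example edit are made up.

```python
import difflib

def review_diff(original: str, proposed: str, path: str = "example.py") -> str:
    """Render an AI-proposed edit as a unified diff so a human reviews only what changed."""
    return "".join(difflib.unified_diff(
        original.splitlines(keepends=True),
        proposed.splitlines(keepends=True),
        fromfile=f"a/{path}",
        tofile=f"b/{path}",
    ))

before = "def add(a, b):\n    return a - b\n"
after = "def add(a, b):\n    return a + b\n"
# Prints one hunk: the unchanged def line, then -/+ lines for the fixed return.
print(review_diff(before, after))
```

A reviewer reads two changed lines instead of re-reading the whole file, and the effect scales as AI-generated changes get larger.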

Infrastructure Transformation: Building for Agents

While everyone's debating AGI timelines, a quieter revolution is happening: the infrastructure layer is being rebuilt for AI consumption.

Think about it: we now have three distinct categories of digital information consumers.

Humans using GUIs;

Programs using APIs;

And now, AI agents that need something in between: programmatic access with human-like flexibility.

This creates massive opportunities for companies that recognize the shift. Every piece of documentation, every API, every digital interface needs to be reimagined for AI consumption. The companies doing this first are gaining significant competitive advantages.

Stripe and Vercel are already converting their documentation to LLM-friendly markdown. Some companies are adding llms.txt files to their domains: simple, readable markdown descriptions of what their services do. Others are replacing every "click this button" instruction with equivalent API calls that agents can actually execute.
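For reference, the llms.txt proposal describes a markdown file served at `/llms.txt`. It contains an H1 with the project name and a blockquote summary, followed by H2 sections that list links with short notes. The generator below is a sketch of that layout as I understand it, not an official tool. The service name, summary, and example.com URL are made up.

```python
def render_llms_txt(name: str, summary: str,
                    sections: dict[str, list[tuple[str, str, str]]]) -> str:
    """Render an llms.txt-style file: H1 title, blockquote summary, H2 sections of links."""
    lines = [f"# {name}", "", f"> {summary}", ""]
    for section, links in sections.items():
        lines += [f"## {section}", ""]
        for title, url, note in links:
            lines.append(f"- [{title}]({url}): {note}")
        lines.append("")
    return "\n".join(lines)

print(render_llms_txt(
    "Example Payments",  # hypothetical service
    "Example Payments is a made-up API for creating and refunding charges.",
    {"Docs": [("Quickstart", "https://example.com/docs/quickstart.md",
               "Create your first charge")]},
))
```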

But here's what most people miss: this isn't about replacing human interfaces. It's about creating dual interfaces. One optimized for human consumption, one optimized for AI agents.
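One way to keep the two interfaces from drifting apart is to generate both from a single source of truth. In this sketch, each documented step stores the human GUI instruction next to the equivalent API request. One renderer produces numbered prose for people, and the other produces JSON that an agent can act on. The `Step` structure and the endpoints (`/v1/keys`, `/v1/deployments`) are hypothetical.

```python
import json
from dataclasses import dataclass

@dataclass
class Step:
    action: str   # what the user is trying to achieve
    gui: str      # how a human does it in the dashboard
    request: str  # the equivalent API call an agent can execute (hypothetical endpoints)

STEPS = [
    Step("Create an API key", 'Click "Settings", then "New key"', "POST /v1/keys"),
    Step("Deploy the project", 'Click the "Deploy" button', "POST /v1/deployments"),
]

def render_for_humans(steps: list[Step]) -> str:
    return "\n".join(f"{i}. {s.action}: {s.gui}" for i, s in enumerate(steps, start=1))

def render_for_agents(steps: list[Step]) -> str:
    return json.dumps([{"action": s.action, "request": s.request} for s in steps], indent=2)
```

When a step changes, you edit it once and both audiences get the update.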

The companies that figure this out first will dominate their markets, because as AI adoption accelerates (and it will), the businesses with AI-native infrastructure will have massive advantages in speed, efficiency, and capability.

How to Survive the Software 3.0 Era

The transformation is happening whether you're ready or not. Here's your survival guide.

Master All Three Paradigms

Don't pick sides. Software 1.0, 2.0, and 3.0 aren't replacing each other – they're layering on top of each other. You'll need traditional coding for performance-critical systems, neural networks for pattern recognition, and LLM prompting for flexible automation. The professionals who understand when to use each approach will dominate this transition.

Build Iron Man Suits, Not Robots

Stop chasing fully autonomous agents. They don't work reliably, and they won't for years. Instead, build augmentation tools with autonomy sliders. Let users control how much they delegate to AI. Focus on making humans more capable, not on replacing them entirely.

Optimize for Speed of Verification

The bottleneck isn't AI generation – it's human verification. Design interfaces that make it trivial to audit AI output. Visual diffs, clear approval workflows, granular control. The faster humans can verify AI work, the more valuable your product becomes.
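"Granular control" can be as simple as approving or rejecting each proposed change individually while keeping an audit trail. This is a minimal sketch with a hypothetical `ProposedChange` record. The `approve` callback stands in for whatever review UI you build.

```python
from dataclasses import dataclass
from typing import Callable

@dataclass
class ProposedChange:
    line_no: int    # 0-based index of the line the AI wants to replace
    new_text: str
    rationale: str  # the model's stated reason, shown to the reviewer

def apply_approved(lines: list[str], changes: list[ProposedChange],
                   approve: Callable[[ProposedChange], bool]):
    """Apply only the changes a human approved and keep an audit trail of every decision."""
    result = list(lines)  # never mutate the caller's copy
    audit = []
    for change in changes:
        ok = approve(change)
        audit.append((change.line_no, "approved" if ok else "rejected", change.rationale))
        if ok:
            result[change.line_no] = change.new_text
    return result, audit
```

The audit list records who let what through, which matters once you, not the model, are responsible for the result.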

Dual-Interface Everything

Every product needs two interfaces now: one for humans, one for agents. Add LLM-friendly documentation. Replace "click here" with API calls. Make your systems accessible to agents, both programmatically and through plain-language descriptions of what they do. The companies that do this first will capture the AI-native market before their competitors even understand what's happening.

The Bottom Line

We're slowly rewriting the entire software stack. That means unprecedented opportunities for those who adapt quickly and massive risks for those who don't. The next five years will separate the companies that understand this transformation from those that become irrelevant.

Choose wisely.
