What Is Artificial General Intelligence, or AGI? And How Close Are We to the Singularity?

AI minds, aliens, and the future of existence: buckle up for a wild ride into the ideas of the technological singularity and AGI

Hello everyone, and welcome to another futuristic episode of Tech Setters, where we discuss technology, science, and the future trajectory of our world! Today, we're going to dive into some seriously mind-bending stuff – Artificial General Intelligence, or AGI, and something called the technological singularity. But let's start by thinking about... well, thinking itself.

The Space of Possible Minds

Let’s start with a simple idea – our minds, as complex and wonderful as they are, represent just a single point in the vast space of possible minds. This space isn't limited to us and our animal friends on Earth. It extends to potential extraterrestrial life forms and, closer to home, the AI we're developing.

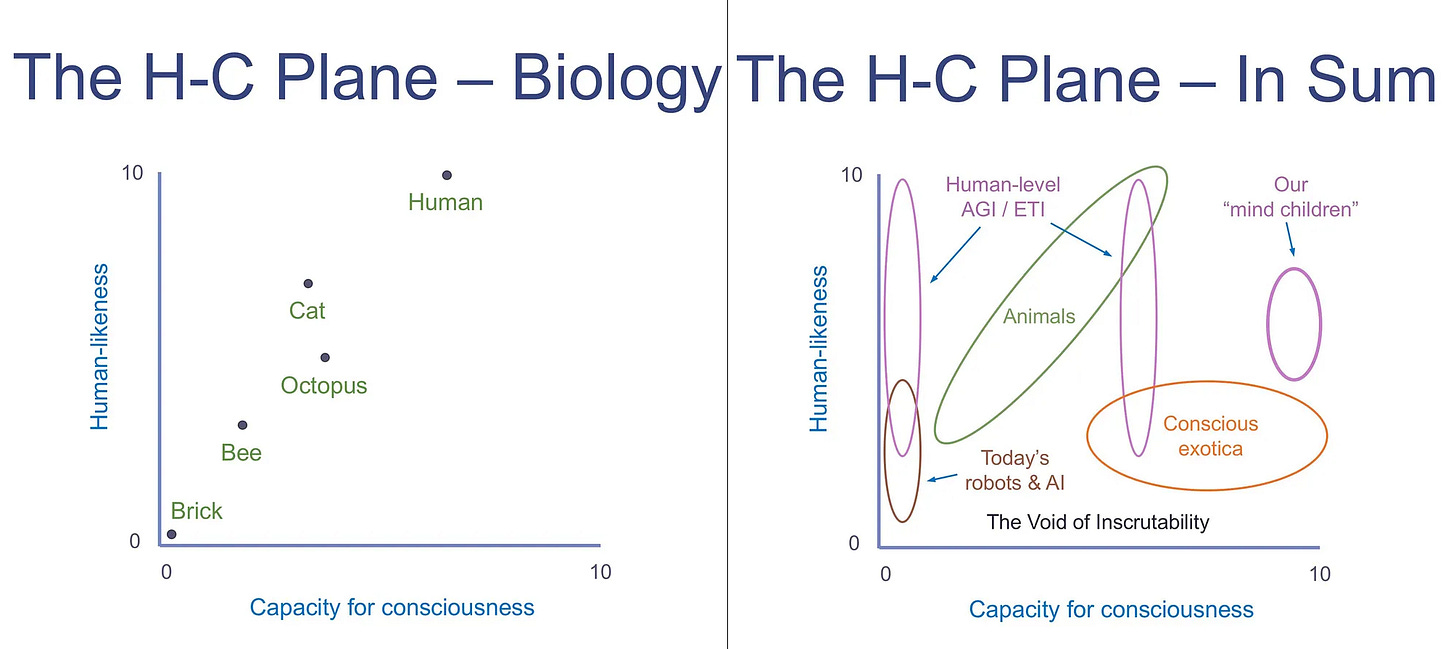

The quest to map out this space of minds takes us on a journey through two dimensions: consciousness and human-likeness. We're pushing the boundaries of what we understand about consciousness, challenging the notion that it's an exclusively human trait. Could a machine or an alien life form possess a form of consciousness so alien that we wouldn't even recognize it as such? It's a question that tickles the imagination and challenges our deepest philosophical beliefs.

Reflecting on whether people can understand a mind radically different from ours, Murray Shanahan, a professor at Imperial College London and senior researcher at DeepMind, described the space of possible minds in two dimensions: an agent's capacity for consciousness and its similarity to humans:

The capacity for consciousness of an agent corresponds to the richness of experience it is capable of;

Since science cannot yet measure this quantity directly, Shanahan estimates it from the level of cognitive ability, which in terrestrial biology is closely tied to consciousness: if a brick is 0 and a human is 10, then a bee is 2, a cat is 5, and an octopus is 5.5;

The AI to AGI Revolution

Today's AIs are amazing, but each is an expert in one narrow area. They might be champs at specific tasks, like dominating the chessboard, but they lack the general intelligence that humans possess – the ability to adapt and thrive in an endless array of environments.

AGI is the next level. It's about machines that are as flexible and adaptable as we are – the kind that could excel at just about anything.

Now, depending on how these AGI systems are built, they could end up anywhere on our mind map. We could end up with AGI that has a kind of awareness, maybe even consciousness, but one so different from our own that it challenges our very understanding of consciousness. Can consciousness exist in entities that don't see, hear, or feel the world as we do? We partially covered this topic in one of our previous discussions.

So, here's an overview of the various potential forms AGI could take, particularly in terms of its capacity for consciousness (refer to the picture above positioning “mind children” on the consciousness map):

The Zombie AGI: Super-smart, but not truly conscious. Imagine a super-powered domestic vacuum-cleaning robot that could handle anything a human servant could;

Conscious AGI: This is where things get really complicated. AGI could develop minds with different levels of consciousness, even some that surpass our own;

Beyond Human: Some AGI systems might be so far removed from anything we understand as "human-like" that we can barely comprehend them. Imagine an AI as alien to us as the intelligent ocean from Stanisław Lem's novel Solaris.

The implications of encountering or creating such minds are profound. They stretch far beyond science and technology into the moral and philosophical. How do we treat a being that might possess a form of consciousness we barely comprehend? Should AI have rights of its own? The development of AGI and our potential encounters with conscious AI will challenge us to expand our moral circle beyond the familiar. It will push us to consider the rights and ethical treatment of beings that might think, feel, and experience the world entirely differently than ourselves.

While we're not there yet, the journey towards AGI is filled with fascinating possibilities and difficult questions. Achieving human-level artificial intelligence is a central goal of AI research, and researchers are exploring many paths. Some seek to replicate the structure of the human brain, while others aim to develop entirely new architectures. Only time will tell which paths will lead us closer to machines that rival, or even exceed, our own intellectual capabilities.

The Technological Singularity Hypothesis

The "technological singularity" (or simply "the singularity") is a hypothetical future moment when technological growth becomes uncontrollable and irreversible, unleashing a whirlwind of change that permanently redefines human society and existence. Progress would no longer be measured in years or decades, but in moments of explosive transformation.

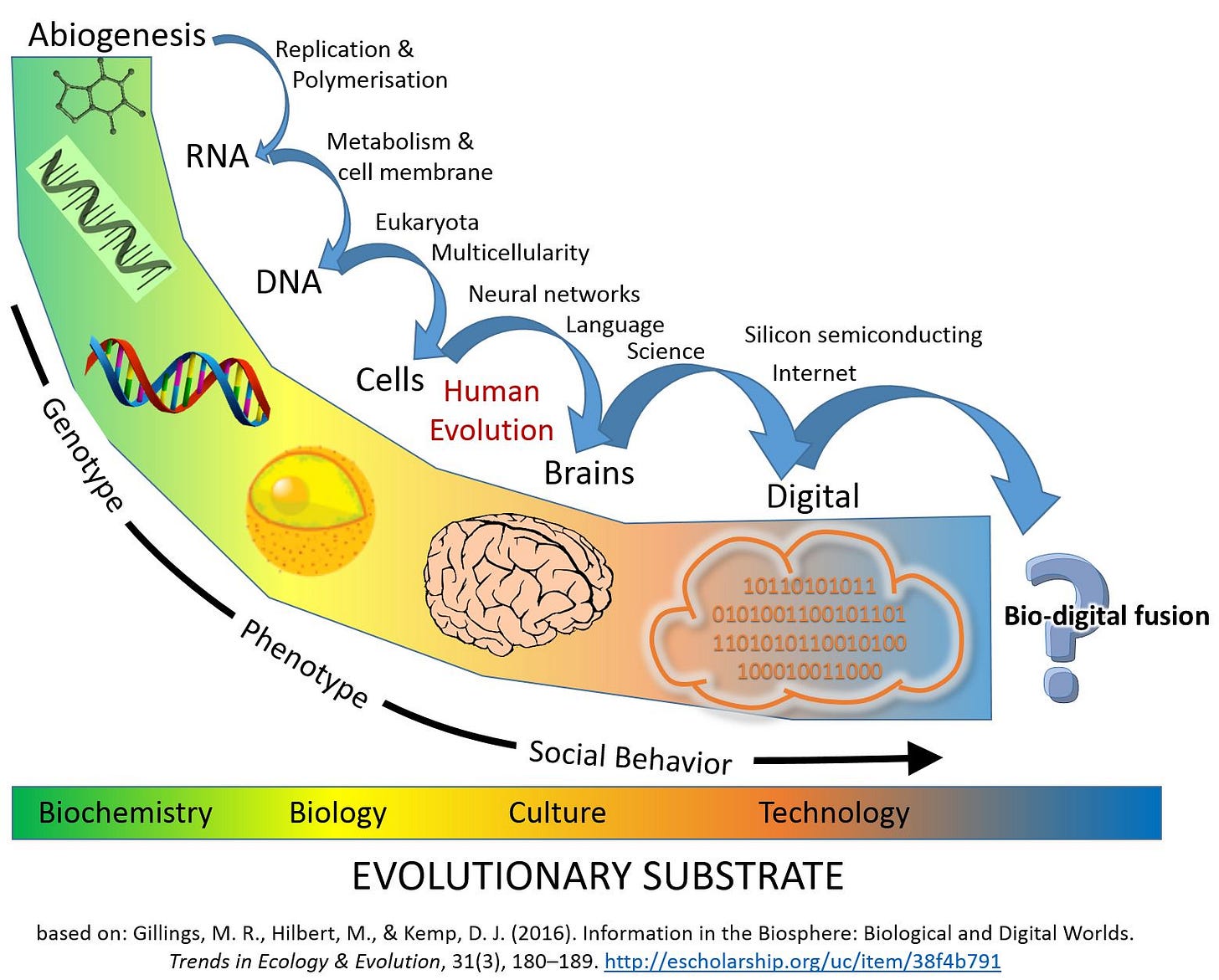

Evolution seems to reward complexity. Our own brains, those complex biological computers with billions of neurons and trillions of connections, are a testament to this process. Many neurobiologists consider the human brain the most complex object in the known universe. Yet evolution doesn't discriminate between natural and artificial systems. What happens when we create something capable of outsmarting its creators?

This is where the "intelligence explosion" theory comes in, and it connects directly to the quest for AGI. In this scenario, an intelligent agent capable of upgrading itself enters a runaway cycle of self-improvement: each new, more capable version designs its successor faster than the last, until the process culminates in a powerful superintelligence. Our understanding of the world, hard-won over millennia, might seem simplistic and childlike in comparison. If these powerful AI systems aren't carefully aligned with human values, the consequences could be profound. You can find more on the AI alignment problem in our previous discussions.

The Origins of the Singularity Idea

The Hungarian-American mathematician and physicist John von Neumann was the first to apply the mathematical concept of "singularity" to a technological context. In a conversation with the Polish-American mathematician Stanislaw Marcin Ulam, he remarked on the accelerating pace of technological progress, suggesting it would lead to a point where human development could no longer keep up with technological advances – technology would outpace human evolution.

This concept, known in mathematics as a "singularity," was thus extended to describe a pivotal moment in human history, marking a transition where extrapolating past changes becomes meaningless due to the unprecedented nature of technological advancement. The idea of singularity, initially rooted in mathematics, was later embraced by transhumanists, science fiction writers, and futurists to reflect on humanity's future.

In 2004, the concept of the Snooks-Panov Vertical emerged from the independent research of physicist Alexander Panov and economic historian Graham Snooks. Panov analyzed the entire history of Earth, uncovering phase transitions within the global ecosystem, each followed by a qualitative leap in development. He found that the time intervals between these transitions shrink exponentially, converging on a point he termed a "singularity." This mathematical term, also used by John von Neumann and Stanislaw Ulam, gained a more futuristic interpretation similar to Vernor Vinge's earlier predictions.
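To see why exponentially shrinking intervals point to a finite date, here is a minimal worked sketch. It assumes, purely for illustration, that each interval between transitions is shorter than the previous one by the same constant factor $\alpha > 1$. The symbols $T_1$ (the first interval), $t_1$ (the date of the first transition) and $\alpha$ are illustrative, not Panov's fitted values.

```latex
T_k = \frac{T_1}{\alpha^{k-1}}, \qquad k = 1, 2, 3, \dots

\sum_{k=1}^{\infty} T_k = T_1 \sum_{j=0}^{\infty} \alpha^{-j} = \frac{\alpha \, T_1}{\alpha - 1}

t_{*} = t_1 + \frac{\alpha \, T_1}{\alpha - 1}
```

Because the infinite sum is finite, infinitely many transitions would crowd in before the date $t_*$. With $\alpha = 2$, for example, all of them fit within $2T_1$ of the first one. That accumulation point is what "singularity" means in this model.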

It’s important to note that whether the singularity is inevitable, or even possible, remains a subject of intense debate. While AI has made stunning advances, true artificial general intelligence (AGI), a versatile intelligence comparable to our own, remains elusive. Critics argue that there may be fundamental limits to the advancement of intelligent machines. Furthermore, even if an intelligence explosion were to occur, its consequences would be deeply unpredictable: nobody can accurately model the behavior of a vastly superior intellect.

When Will the Singularity Arrive?

Now that we've grasped the ideas of the technological singularity and Artificial General Intelligence (AGI), the burning question remains: is this hypothetical future inevitable? The truth is, there's no simple "yes" or "no" answer. However, many experts expect AGI to be created eventually, a view supported by the rapid progress we've already seen in AI development.

Panov concluded that humanity would reach the singularity around the year 2050. While the precise nature of the predicted singularity remains open to debate, the core idea is that human society and technology may undergo a rapid and unprecedented transformation around the middle of the 21st century.

In 2019, 32 AI experts participated in a survey on AGI timing:

45% of respondents predicted a date before 2060;

34% of all participants predicted a date after 2060;

21% of participants predicted that the singularity will never occur;

It's also worth noting that AI entrepreneurs tend to hold a more optimistic outlook than researchers.

Rather than fixating on specific dates, it's more useful to focus on the ongoing advancements in AI and how they are shaping our world. By observing the emerging trends and the incredible new capabilities that keep appearing, you can form your own informed opinion about the potential impact of the singularity and AGI.

The AI Revolution Is Happening (And It's Fast)

Okay, I know we talk about this stuff a lot, but seriously, you won't believe what AI is doing now. It's like every month there's some new thing that just blows your mind. Forget about last year, let's just take a look at what's happened in the last few months – it's getting a little crazy out there!

AI is learning to see the world through the eyes of a child

This could be the first step towards creating "digitants" – digital beings that learn in a uniquely human way. While the inner workings of both human minds and large language models remain somewhat mysterious, we do understand the basics of how children acquire language: they watch, listen, and associate words with the world around them.

A breakthrough study, "Grounded language acquisition through the eyes and ears of a single child," published in Science in 2024, introduces a new AI learning method inspired by this childhood process. This work could reshape our understanding of language, learning, and cognition.

Previously, AI models were trained on massive datasets of text and images. They learned through complex pattern recognition, a far cry from the way children experience the world.

The new model, called CVCL (Child's View for Contrastive Learning), learns like a child. Imagine it seeing the world from the perspective of a small child between 6 and 25 months old: through a head-mounted camera and microphone, it sees and hears much of what the child does.

Astonishingly, the model was fed just 1% of the video and audio experienced by the child (roughly 60 hours and 250,000 words). After training, it was able to identify a significant number of words and concepts present in the child's everyday life and could even generalize some words to new visual examples.
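The core of CVCL's training, as the study describes it, is a contrastive objective. Embeddings of video frames and of the words heard at the same moment are pulled together, while mismatched frame-utterance pairs from the same batch are pushed apart. Below is a minimal, simplified sketch of that kind of symmetric (CLIP-style) contrastive loss in plain Python. It is not the authors' code. The toy 2-D embeddings, the function names and the temperature of 0.1 are assumptions chosen for illustration, and the real model learns its embeddings with neural encoders.

```python
import math


def cosine(u, v):
    """Cosine similarity between two equal-length vectors."""
    dot = sum(a * b for a, b in zip(u, v))
    norm = math.sqrt(sum(a * a for a in u)) * math.sqrt(sum(b * b for b in v))
    return dot / norm


def log_softmax_at(logits, index):
    """Numerically stable log-softmax of `logits`, evaluated at `index`."""
    m = max(logits)
    log_sum = m + math.log(sum(math.exp(x - m) for x in logits))
    return logits[index] - log_sum


def contrastive_loss(frame_embs, utterance_embs, temperature=0.1):
    """Symmetric InfoNCE-style loss (an illustrative sketch, not CVCL's code).

    Frame i and utterance i are the matching (positive) pair; every other
    pairing in the batch acts as a negative example.
    """
    n = len(frame_embs)
    # sims[i][j]: scaled similarity between frame i and utterance j
    sims = [[cosine(f, u) / temperature for u in utterance_embs] for f in frame_embs]
    # Frame -> utterance direction: each row should pick out its own utterance
    frame_to_text = -sum(log_softmax_at(sims[i], i) for i in range(n)) / n
    # Utterance -> frame direction: each column should pick out its own frame
    text_to_frame = -sum(
        log_softmax_at([sims[j][i] for j in range(n)], i) for i in range(n)
    ) / n
    return (frame_to_text + text_to_frame) / 2


frames = [[1.0, 0.0], [0.0, 1.0]]    # hypothetical frame embeddings (e.g. "ball", "cat")
aligned = [[0.9, 0.1], [0.1, 0.9]]   # utterances matched to the right frames
swapped = [[0.1, 0.9], [0.9, 0.1]]   # the same utterances, mismatched
print(contrastive_loss(frames, aligned))  # small loss
print(contrastive_loss(frames, swapped))  # much larger loss
```

A lower loss means the embeddings already pair each frame with the right words. Training adjusts the encoders to push this number down across the child's recorded frame-utterance pairs, which is how word-object associations emerge without any explicit labels.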

This study shook the AI community. Initially skeptical, one peer reviewer admitted the work completely transformed their perspective.

This breakthrough opens doors to exciting possibilities:

Expanding Learning – CVCL learning could move from still frames to episodes over time, and from transcripts to raw sound for a richer experience;

From Fragmentation to Full Immersion – The model could eventually learn from a child's full, continuous stream of experience;

Embodied Digitants – We could turn these models into digitants: embodied agents that are active and responsive to their environment;

Gemini 1.5 Pro: AI Leaps Forward with Childlike Vision

One of the most astounding recent breakthroughs is the release of Gemini 1.5 Pro. This upgraded model boasts an enormous context window and unlocks remarkable new visual capabilities. It's arguably a significant step towards building AIs that mirror the way children learn.

Remember the possibilities we just discussed for the CVCL model? It learns by watching and listening to the world like a child, associating words with the objects it sees. Gemini 1.5 Pro expands on this in an incredible way: you can now feed it a short video, and the model will analyze the visuals to identify objects or extract complex information.

To illustrate this power, let's look at a recent showcase by Simon Willison. He filmed a seven-second video of one of his bookshelves. Gemini 1.5 Pro not only analyzed the video and returned a list of book titles, it did so even when some titles were partially obscured or blurry! Just a few years ago, this level of visual comprehension from an AI would have been hard to imagine. It shows that, by breaking a video down into individual frames, an AI can process the world in a way surprisingly similar to how we humans might analyze it.

Gemini 1.5 Pro's massive context window of up to 1 million tokens is exciting for another reason: it hints at overcoming some limitations of human memory. Our brains, however impressive, don't maintain perfect recall. Older memories fade, and details blur. An AI model, by contrast, can refer back to anything within its context window without that kind of degradation, at least within a single session. Within those limits, it can draw on an increasingly rich base of information, much like how our own experiences expand our understanding over time.
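Because the model works on a sampled sequence of video frames, a rough back-of-the-envelope calculation shows why a 1-million-token window matters for video. The sketch below assumes about one sampled frame per second and about 258 tokens per frame, figures Google has published for the Gemini API but which may differ between model versions or change over time. Audio tokens are ignored, and `video_tokens` and `max_video_seconds` are hypothetical helpers, not part of any Google SDK.

```python
# ASSUMPTIONS (check current Gemini documentation; these may change):
ASSUMED_FRAMES_PER_SECOND = 1   # video sampled at roughly one frame per second
ASSUMED_TOKENS_PER_FRAME = 258  # approximate token cost of each sampled frame


def video_tokens(seconds: int) -> int:
    """Approximate token cost of a silent video clip (audio ignored)."""
    return seconds * ASSUMED_FRAMES_PER_SECOND * ASSUMED_TOKENS_PER_FRAME


def max_video_seconds(context_tokens: int) -> int:
    """Longest silent clip, in whole seconds, that fits in a context window."""
    return context_tokens // (ASSUMED_FRAMES_PER_SECOND * ASSUMED_TOKENS_PER_FRAME)


print(video_tokens(7))                               # → 1806 (a 7-second clip)
print(round(max_video_seconds(1_000_000) / 60, 1))   # → 64.6 (minutes in a 1M window)
```

Under these assumptions, a seven-second clip like the bookshelf video costs under 2,000 tokens, and a 1-million-token window holds a bit over an hour of silent video.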

It's also important to note that AI doesn't only draw on the information in its memory; it can also analyze and reason across various inputs (images, videos, text) together with its knowledge base. With such tools, AI can now "see" the world a bit more like we do, extracting essential details from everyday life. This is a breathtaking step towards those "digitants" – digital beings that learn like children, building their understanding from the ground up.

The Beginning of a New Era

Okay, let's cut through the fancy jargon for a second. Forget about all those complex definitions of the singularity. You can actually feel it coming, right? It's that sense that everything important in a field is happening right now.

Just take a look at what Sam Altman, the CEO of OpenAI, said recently: "Scaling laws are decided by god; the constants are determined by the members of the technical staff." Sounds a bit intense, huh? Well, the crazy progress we've seen in AI this past week suggests he's not exaggerating.

Big AI companies might be downplaying this whole thing to avoid freaking everyone out, but those of us lucky enough to play with models like Gemini 1.5 Pro know what's up. We're seeing stuff that blows our minds, and it raises some big questions:

Are we witnessing the birth of a whole new kind of intelligence on Earth? Like, a "digital mind"?

Okay, maybe this isn't some familiar, human-like intelligence. It's totally alien, maybe even more different from us than an octopus's brain!

Even without a body, these digital AI things are so good at mimicking us, they make us question what even counts as "consciousness" anymore!

Thank you for joining me on Tech Setters for this enlightening exploration. I don't know about you, but I'm incredibly excited (and a little bit nervous!) to see where this all leads. So, where do we go from here? Yeah, the singularity might be a ways off, but this is a huge deal – and it's happening right now. Until then, stay tuned and stay aware!
