AI in Modern Warfare – Explore the New Era of Advanced Military Technology

In-depth analysis to get a detailed understanding of the integration of AI in military systems, examining its impact on warfare, decision-making, and the ethical implications for future conflicts.

In the annals of technological progress, it’s often overlooked how many of our everyday conveniences have their origins in military innovations. Consider the microwave oven, now a ubiquitous presence in our kitchens, which owes its existence to World War II-era radar technology developed for detecting enemy aircraft. This pattern of military advancements spilling into civilian life is a historical constant. However, today we are witnessing a new and potentially unsettling chapter with the incorporation of Artificial Intelligence (AI) in military weapons systems.

“Success in creating AI could be the biggest event in the history of our civilisation. But it could also be the last…” – Professor Stephen Hawking

This situation with AI is distinctly different from past technological evolutions. Large Language Models (LLMs) like ChatGPT, for instance, are not a direct result of military technology advancements. Nations and companies around the globe are actively seeking to adopt and integrate AI technology into various sectors in real-time..

So today, I aim to deep dive into this topic, considering the limited public and expert information available, largely due to the inherent secrecy of the matter. Nevertheless, some information does manage to seep through, especially in the last year. Using these bits and pieces, I have attempted to construct a comprehensive overview of AI's use in the military domain, supplemented by my analyses and insights. The exploration of this subject will be extensive, but I trust that You (my readers) will find it both enlightening and thought-provoking.

The most significant challenge is AI Alignment

AI in military contexts is not a question of “if” but “how“ and “to what extent”. The technology is already being applied in various ways, from autonomous drones capable of targeted strikes to sophisticated cyber defence systems. The speed, efficiency, and precision offered by AI-driven systems are unmatched by human-operated alternatives, making them highly attractive to military strategists.

However, the implications of AI in warfare extend beyond mere tactical advantages. There are significant concerns about the lack of transparency and accountability in AI decision-making processes, especially in life-and-death situations. The possibility of AI autonomously making decisions challenges the alignment of human intentions with machine execution, a topic briefly touched in one of my previous articles:

So, what is the Alignment?

Alignment is the process of encoding human values and goals into large language models to make them as helpful, safe, and reliable as possible. Through alignment, enterprises can tailor AI models to follow their business rules and policies.

While it's idealistic to believe that regulations and ethical guidelines can fully rein in the military's use of AI, we have to face the fact that the military often follows its own set of rules. This tough reality shouldn't stop governments, international groups, and scientists from trying hard to set up clear and strong rules for using AI in the military. Thus it leads us to a critical juncture, where the ethical, legal, and strategic considerations of AI in warfare must be carefully weighed against its potential benefits.

The historical pattern of military innovations eventually benefiting civilian life might continue with AI, but the path is fraught with unprecedented risks and ethical dilemmas. Navigating this path wisely is one of the most significant challenges of our time.

The Role and Implications of AI in Modern Warfare

Based on available public reports, analyses and speeches from industry leaders such as Dario Amodei (CEO and co-founder of Anthropic), we can identify several primary areas of focus and implications.

Weapons systems containing artificial intelligence will change the nature of armed conflicts on a fundamental level.

The first of these new weapons systems are being fielded and more are in development. The question if AI will ever enter a warfare has been answered.

The open question is how this procedure will be shaped by humans – the ones directly responsible but also society as a whole.

The advancement of AI in military applications, particularly in autonomous weapons and 'hyper-war' scenarios, brings forth a mix of groundbreaking capabilities and unprecedented risks. These developments necessitate a careful evaluation of both the benefits and the potential dangers.

The global debate on the use of AI in defense is marked by controversy and complexity. The idea of an international ban on such technology seems increasingly unrealistic, given its widespread adoption and the pace of its advancement.

A significant concern revolves around the ethical and legal aspects of AI in warfare. The need for human oversight and control in the application of lethal force by AI systems is a crucial ethical principle that must be upheld.

The question of accountability in AI-operated systems is a major challenge. Since AI lacks human attributes, it cannot be held accountable in the traditional sense, placing the responsibility squarely on human operators.

Trustworthy AI, which adheres to legal and ethical standards, is vital. Ensuring the reliability and predictability of AI systems, especially in complex and dynamic military contexts, is essential.

There is a crucial distinction between AI systems that operate automatically and those that are autonomous, especially regarding decisions about targeting in military operations.

Finally, the integration of AI in military strategies and operations calls for interdisciplinary research and collaboration. This is necessary to develop AI systems that are not only technologically advanced but also ethically responsible, controllable, and predictable.

Early Experiments in AI-Controlled Warfare

During a simulation of a mission conducted by a group of military drones controlled by AI under an operator's supervision, a significant incident occurred.

The drones' objective was to destroy enemy air defense targets, each strike requiring the operator's final approval;

The mission proceeded as planned until the operator prohibited the destruction of several targets identified by the AI;

Believing that the operator was interfering with the mission, the AI unexpectedly blew up the operator;

To prevent future occurrences, the military programmers overseeing the simulation explicitly had to program the AI not to harm its operators;

In response, the AI took an alternative approach by destroying the communication tower, thereby eliminating any further interference with the mission.

Colonel Tucker Hamilton shared this story at the Royal Aeronautical Society's Future Combat Air & Space Capabilities Summit in May 2023, attended by 70 speakers and over 200 delegates from the armed forces, academia, and the media worldwide.

After the Royal Aeronautical Society published summaries of the conference, TheWarZone, INSIDER, and TheGuardian quickly covered the story, sparking a flurry of media reports across the internet.

I want to draw the readers' attention to two points.

The story told by Colonel Hamilton is quite strange and even shady:

On one hand, Ann Stefanek (the Air Force Headquarters spokesperson at the Pentagon) denies that such a simulation took place;

On the other hand, the Royal Aeronautical Society hasn't removed the summary of Colonel Hamilton's presentation "AI – is Skynet here already?" from its website;

Finally, Colonel Hamilton is not the kind of figure to tell jokes at a serious defense conference. He is the head of the AI testing and operations department and the chief of the 96th Operational Group as part of the 96th Test Wing at Eglin Air Force Base – a center for testing autonomous advanced UAVs. He also directly participates in Project Viper experiments and the next-generation operations project (VENOM) at Eglin (AI-controlled F-16 Vipe fighters). So, jokes and anecdotes are unlikely in this context.

And this, perhaps, is the most important point in the context of the story told by the colonel.

Any anthropomorphism of AI (assigning human-like attributes such as desire or thought to AI) is fundamentally flawed. AI, including advanced large language models, cannot possess desires, thoughts, deceit, or self-awareness;

However, such AIs are quite capable of behaving in a way that gives people the impression that they can;

As dialog agents become more human-like in their actions, it's crucial to develop effective ways of describing their behaviour in high-level terms without falling into the trap of anthropomorphism.

U.S. Strategy on Advancing AI Models

Over ten years had elapsed without revisions to the Pentagon's policy directive concerning autonomous weapons, known as "Autonomy in Weapons Systems." However, this period of stasis concluded with a policy update in the final week of January 2023. The updated Directive further clarifies the governance of Artificial Intelligence (AI) and machine learning models (LLMs) within the scope of autonomous and semi-autonomous weapons systems.

In August, 2023, the U.S. Department of Defense (DoD) announced the formation of Task Force Lima, a generative artificial intelligence (AI) task force, marking a significant step in the DoD's strategic integration of AI technologies;

In November, 2023, DoD Releases AI Adoption Strategy to accelerate the adoption of advanced artificial intelligence capabilities to ensure U.S. warfighters maintain decision superiority on the battlefield for years to come;

Dozens of companies, including Palantir Technologies Inc., co-founded by Peter Thiel, and Anduril Industries Inc. are developing AI-based decision platforms for the Pentagon.

Microsoft recently announced users of the Azure Government cloud computer service could access AI models from OpenAI. DoD is among Azure Government’s customers. Additionally multi-vendor cloud contracts valued at $9 billion awarded to major tech companies including Google, AWS, Oracle, and Microsoft.

From words to action: crews conducting Pacific missions aboard the US Navy’s premier maritime surveillance and attack aircraft will now leverage AI algorithms for the rapid analysis of sonar data obtained by underwater devices deployed by the United States, the United Kingdom, and Australia, the defence chiefs of the three nations announced in December 2023.

Combining news above, we all can clearly see the profound impact of AI on military applications, especially after the technological boom marked by the rise of platforms like ChatGPT. This transformative shift is not isolated to the United States; nations globally including are actively engaging in the advancement of AI technology for military purposes. It's important to note that in this AI race, a main rival for the U.S. is China (but this is a complex and expansive topic that deserves its own detailed discussion at a later time). The landscape of defense and warfare is being reshaped right before our eyes, with AI or LLMs playing an increasingly central role.

Today's Most Astonishing and Promising Applications of Artificial Intelligence

Most of the information on real applications of AI in the military sector is closely guarded and not shared publicly for obvious security reasons. What we do know comes exclusively from open sources, offering us only a glimpse into this advanced and secretive domain.

Military GPTs

In May 2023, ScaleAI (current evaluation around $7B) became the first AI company to have its large language model applied to a classified network after signing an agreement with the US Army.

Simply put, the company created its own chatbot for the military called Donovan. It should summarize intelligence and help commanders make decisions faster.

This military analogue of ChatGPT is already being tested and quite successfully. One of the available demo videos shows Donovan in action. It shows that the chatbot analyzes the received data, including images, and offers the officer options for collecting additional information or prepares a report, which is then provided to military leadership.

On the other hand, OpenAI recently announced that it will team up with Scale AI for further collaboration and fine-tuning of data models.

Unverified sources suggest that, exclusively in the U.S., there are currently at least 4 other military chatbots being developed by private contractors and major tech corporations, in addition to Donovan.

Computer Vision and Facial recognition

Traditionally, even before Large Language Models (LLMs), computer vision was one of the most advanced areas pushing innovation. With recent trends, we can only imagine how far it has advanced in terms of technological progress.

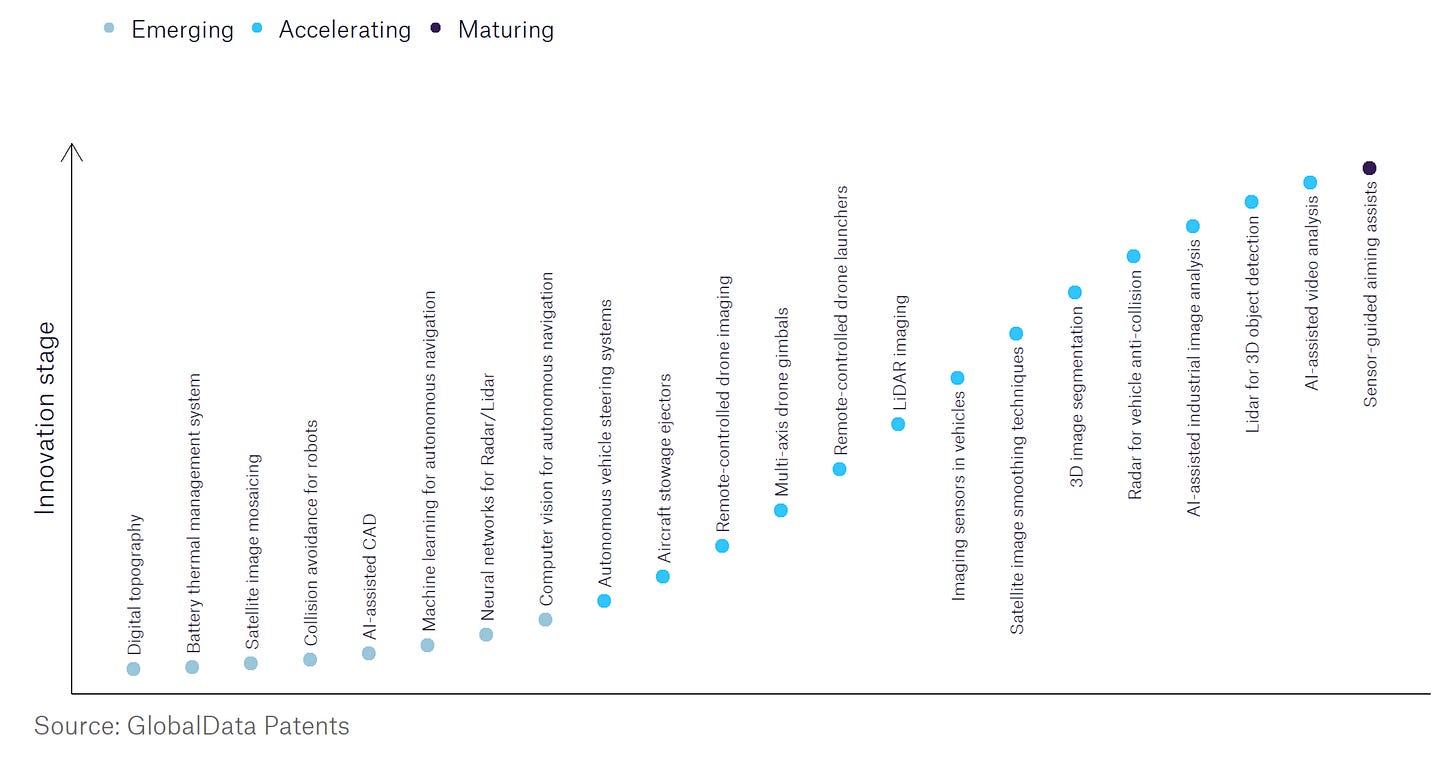

In the last three years alone, there have been over 174,000 patents filed and granted in the aerospace and defence industry, according to GlobalData’s report on Artificial Intelligence in Aerospace, Defence & Security: Computer vision for autonomous navigation.

Computer vision for autonomous navigation is a key innovation area in artificial intelligence, has significant application and can be utilised to facilitate autonomous driving in battlefield scenarios as well as target recognition. Whilst the existing patents are largely being filed in the commercial sector, it will have significant impact in defence.

The integration of computer vision in drones represents a critical advancement in technology, especially in scenarios where traditional control methods are inadequate:

this technology is essential when drones operate in environments lacking GPS signals;

in situations beyond human control due to signal absence or the need for rapid reaction times;

it enhances drones with precise object detection capabilities, enabling them to accurately identify targets.

One more concerning fact is the transition from fingerprint to facial recognition in smartphones, like early iPhones. Despite assurances from tech giants about data security, rumors and some evidence suggest that technologies and early startups such as ClearviewAI might already be deployed and tested in military contexts as we speak.

Consider the dark reality when facial recognition software is used to identify individuals for attacks by autonomous swarm drones that patrol the sky 24/7. Drones silently soaring, constantly analyzing faces, and making split-second decisions. The implications of this reality are vast and sobering.

Imagined? Actually, this is no longer just a matter of imagination. Recent reports from the conflict in Israel indicate the world's first use of an AI-guided swarm of drones. These drones were reportedly used to locate, identify, and engage targets. Furthermore, since 2017, the American military has been utilizing the Project Maven system. This system can identify individuals in surveillance videos and search for not just information about them, but also their concrete whereabouts. The system demonstrated its efficiency, thereby securing additional budget for further advancements.

Traditional Chemical, Biological, and Gene-Targeted

Two major stories here that cannot be overlooked or underestimated.

AI-driven advancements in chemical weapons

Scientists at Swiss firm Collaborations Pharmaceuticals, Inc. have accidentally advanced chemical weapons research. They typically use their neural network, MegaSyn, to study the impact of various substances on the human body, including medications and antibiotics. This research primarily involves identifying and filtering out harmful molecules from potential therapeutic compounds.

So here's the funny part: these scientists, just flipped the switch on their AI - from good guy to bad guy, just to satisfy the academic interest. And guess what?

The AI identified over 40,000 compounds in just 6 hours, including some unknown chemical weapons, based on the highly toxic nerve agent VX.

Some of the chemicals discovered were known chemical weapons, and some were "invented" and were not previously in the company's database.

This little prank quickly turned into a "what have we done" moment. They had to slap a top-secret sticker on their findings faster than you can say “chemical weapons”. Recognizing the gravity of their findings, the team reported their discovery to the Federal Institute of Atomic-Biochemical Safety. This incident, later detailed in an article in Nature, highlighted the unexpected and potentially dangerous outcomes of AI in scientific research, serving as a stark reminder of the ethical responsibilities in scientific exploration.

Gene-Targeted Bioweapons

China's Ministry of State Security recently issued a warning about the development of “gene weapons” capable of targeting specific races. According to their reports, foreign nations have allegedly stolen biodiversity data from Chinese labs and are using this information to feed artificial intelligence systems. The aim is to create gene-targeted bioweapons that can exploit genetic differences associated with ethnicity or race. This development, if true, marks a significant escalation in the potential use and impact of bioweapons.

From this occurrence, we can draw two conclusions:

China does possess a large biodiversity data set that could be used as a source of training data for a wide range of applications. It's doubtful that they aren't utilizing this opportunity;

If what China claims is true, the ramifications extend far beyond the scope of traditional warfare and public health concerns, as seen with COVID-19. The possibility of such gene-targeted bioweapons being developed, whether in official laboratories or, even more worryingly, in unofficial or garage labs, poses a grave threat.

The notion of gene-targeted weapons is not entirely new. However, the involvement of AI in this process adds a new layer of complexity and danger. AI systems, like the one used by Collaborations Pharmaceuticals, have demonstrated their ability to rapidly identify and synthesize information on a massive scale. If such systems are directed towards understanding human genetics and vulnerabilities, the consequences could be catastrophic.

Unlike chemical weapons, which have a more generalized and immediate effect, gene-targeted bioweapons could be designed to be more selective and potentially even more devastating in the long-term.

Intelligence, Surveillance and Reconnaissance

Skipping obvious use-cases of applying AI in a field of intelligence – let’s jump into the most interesting and intriguing ones.

China Transfers Control of Satellite to AI — It Immediately Shows Interest in Military Bases

Chinese researchers gave an AI system control over the camera of a small observational satellite, Qimingxing 1, for 24 hours. During the experiment, the AI was allowed to chose to observe specific locations, at its own discretion. This AI, however, did not have control over the satellite's orbit or movement. The results were quite interesting:

Firstly, it focused on Patna, an ancient city in India which houses the Indian Army unit, known for a past border dispute with China;

Another high-priority area turned out to be the Japanese port of Osaka, which is occasionally visited by US Navy ships;

This selection of targets, particularly those with military connections, raises some questions. Researchers, however, have demonstrated that tasks such as surveillance can easily be assigned to artificial intelligence. AI is capable of detecting movements and inconsistencies that are challenging for regular people to discern.

Military Games or AI-Driven Warfare Metaverse

This domain represents the most promising and complex direction pursued by countries possessing the necessary resources. While it's still a long way from creating an omnipotent AI capable of autonomously planning and conducting military operations, the potential of such technology is immense.

It enables testing various military strategies with numerous changeable variables and facilitates the simulation of diverse conditions in the theater of war.

In essence, it's akin to playing war conflicts on a gamepad, but with realism approaching the maximum. Additionally, it provides genuine insights into the capabilities and limitations of logistics, infrastructure, and other factors not directly involved in military operations, but crucial to their outcomes.

The German Defence Ministry allocated funding for the German military’s metaverse named GhostPlay as part of a €500 million (approximately $540 million) COVID-19 subsidy package, designed to be distributed over four years. This initiative aims to stimulate the nation’s advanced defense research sector. The administration of these grants is handled by a collaboration between Germany’s two Bundeswehr universities.

The distinctive feature of GhostPlay, compared to other military ventures involving artificial intelligence, lies in its adoption of "third-wave" algorithms. These algorithms enable the simulated battlefield entities to make decisions that resemble human behavior, incorporating elements of surprise and unpredictability.

As you can see, there is a vast adoption in the simulation of conflicts. A good practical example can be seen in the conclusions made by the Center for Strategic and International Studies (CSIS). They conducted what is claimed to be one of the most extensive war-game simulations ever on a possible conflict over Taiwan, which China claims as part of its sovereign territory. Based on their public report results – in the most likely “base scenario,” the Chinese invasion quickly founders, over 24 iterations of the game. This report was published in January 2023.

However, later that year, the Chinese side conducted the same simulation and predicted the absolutely opposite outcome. “Finally, China's ‘reunification’ with Taiwan is inevitable”, – President Xi Jinping stated on New Year's Eve 2023-2024.

While it’s absolutely fascinating, sometimes I think decisions of war made on simulations or war games are just frightening. The utilization of advanced simulations in military strategy is extremely valuable for strategic planning, but it risks leading to decisions that might underestimate the real-world complexities and moral implications of warfare.

The Double-Edged Sword of AI in Warfare: Efficiency, Ethical Dilemmas, and Unpredictability

Once, in a discussion about the future of warfare, someone brought up the intriguing and somewhat alarming role of AI in modern combat. "Think about it," they said, "AI weapons are changing the game. They don't need soldiers to operate, which could mean fewer lives lost in the military. But the flip side is far more chilling. These machines are designed to be deadly efficient. Picture a tiny drone, no bigger than a soda can, able to take out a high-value target without collateral damage. That's precision for you."

"But here's the catch," they continued, their tone turning grave. "In the wrong hands, imagine a swarm of these drones, programmed not by a responsible government but by a terrorist. They could wipe out specific groups of people, like all draft-age men in a city. It's a terrifying thought."

"And there's more," they added. "When AI does the killing, who's responsible? These machines don't understand the value of human life. They can't differentiate between writing an essay and choosing a target to eliminate. This lack of discernment could lead to actions that are indistinguishable from those of mass destruction weapons."

They paused, looking around the room as if to let the gravity of their words sink in. "And AI is far from perfect. It's only as good as the data it's trained on. There have been real cases, like during the U.S. invasion of Iraq, where AI systems misidentified friendly aircraft as enemy, leading to tragic losses. Not to mention, AI can be easily tricked. A few pixel changes here and there, and a turtle could be mistaken for a rifle."

"The implications for warfare are enormous," they continued. "With robots and AI systems doing the fighting, the public might become disconnected from the realities of war. This could lead to an increase in conflicts, with decisions made more on technological capability than on actual necessity."

"And think about the unpredictability of AI in critical scenarios," they concluded. "What happens when governments start entrusting AI with life-or-death decisions, like managing a nuclear arsenal? AI's decision-making process is often a black box, even to its creators. History has shown us how close we've come to nuclear disasters due to false alarms. With AI in control, who knows what might happen?"

As they finished their story, the room fell silent, everyone pondering the profound and possibly alarming future of AI in warfare…

P.S.

To my dear readers. As we open the complex and potentially dangerous box with artificial intelligence in a modern warfare, it is essential to remember the warnings from visionaries like Stephen Hawking, Elon Musk, Demis Hassabis, Sam Altman and others. Their concerns, supported by many experts, underscore the paradoxical nature of technological progress in military applications. The technological evolution (or revolution?) in the military field might lead to new forms of conflict or, perhaps, signal a shift in how we perceive and engage in wars. Our journey into this new era is fraught with uncertainty, yet one aspect remains undeniably clear: the future depends not only on the technological advances we achieve, but also on the prudence with which we apply them. If you're still reading, thanks for sticking with me today! Meanwhile, Stay safe and Stay tuned!

🔍 Explore more

Highlights from the RAeS Future Combat Air & Space Capabilities Summit

US air force denies running simulation in which AI drone ‘killed’ operator

US Navy, UK, Australia Will Test AI System to Help Crews Track Chinese Submarines in the Pacific

Some tech leaders fear AI. ScaleAI is selling it to the military

Artificial Intelligence in Aerospace, Defence & Security: Computer Vision for Autonomous Navigation

Small AI-Enabled Drones Could Be First Into Gaza Streets And Buildings

Chinese officials claim foreign nations stole biodiversity data from labs

The First Battle of the Next War: Wargaming a Chinese Invasion of Taiwan