AI Safety Report 2025: What It Means for Your Job and Future

AGI is dead – long live General-Purpose AI! The International AI Safety Report 2025 has redefined the future of artificial intelligence, job security, and global risks.

Welcome back to another futuristic episode of Tech Trendsetters! Today, we’re diving into what may be the most promising moment in AI history, triggered by the release of the International AI Safety Report 2025.

Why did this catch my attention?

This could be one of the biggest shifts in how we think about artificial intelligence.

More importantly, it’s set to affect something that matters to all of us: our incomes, our job security, and the future of human existence.

Until recently, we all lived in a world with only one higher intelligence – human intelligence. For decades, the idea of a second, non-biological higher intelligence, often referred to as Artificial General Intelligence (AGI), remained a futuristic concept (except, of course, for you, me, and everyone who follows Tech Trendsetters).

Some said AGI would arrive in five years. Others speculated it could take fifty years or more. As it turns out, the big AGI debate is officially over. Not because we finally built AGI, but because serious AI experts have decided to throw the whole term in the trash where it belongs.

The Dawn of a New AI Era

Something extraordinary is about to happen in Paris. On February 10 and 11, 2025, the Grand Palais will host what might be the most significant gathering in AI history – the Artificial Intelligence Action Summit. It could pass for just another tech conference, but I don’t think it is. We’re talking about an unprecedented assembly of global leaders: heads of state and government, heads of international organizations, CEOs of both tech giants and startups, leading academics, NGO representatives, and even artists and cultural figures.

What makes this summit particularly significant is its foundation. As “food for thought” for participants, the 297-page International AI Safety Report 2025 was published yesterday. It was written by 96 AI experts from around the world, including Nobel laureates and Turing Award winners, and is backed by 30 countries along with the OECD, the UN, and the EU.

The path to this summit was carefully laid out through previous gatherings at Bletchley Park in November 2023 and Seoul in May 2024, which, I think, have built momentum toward this crucial moment in AI history.

So, AGI is Dead. What Now?

Let’s get real here. The term Artificial General Intelligence (AGI) has been stretched, abused, and redefined so many times that it now belongs in the same category as UFO conspiracy theories and crypto schemes. The International AI Safety Report 2025 finally puts it to rest.

Instead, we now have a cleaner, more grounded term: General-Purpose AI (GPAI).

Why? Because:

GPAI has a clear and testable definition – unlike AGI, which has been a philosophical playground, GPAI refers to AI systems that can independently perform, or assist users with, a wide range of tasks, from creating text, images, video, and audio to executing actions and annotating data.

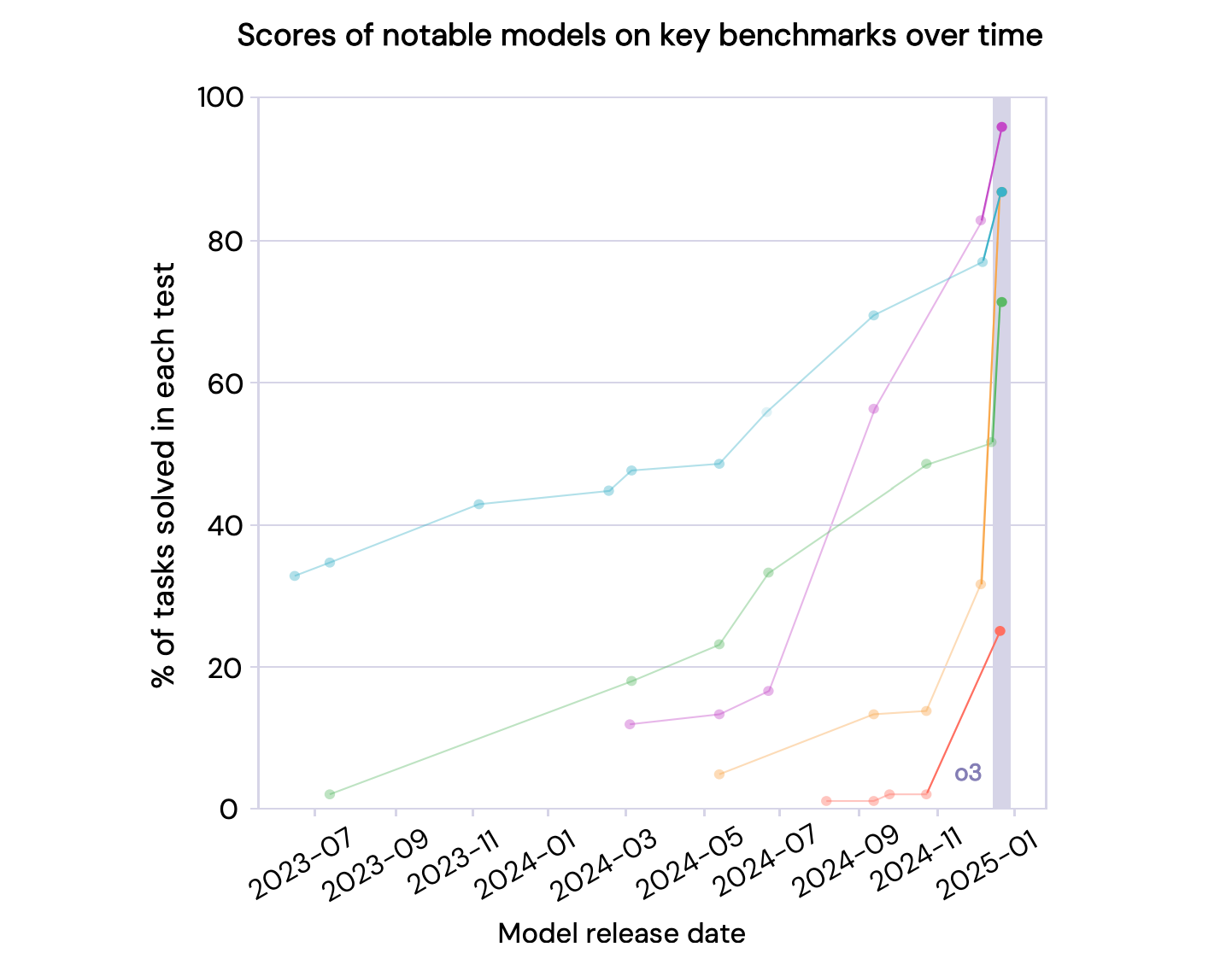

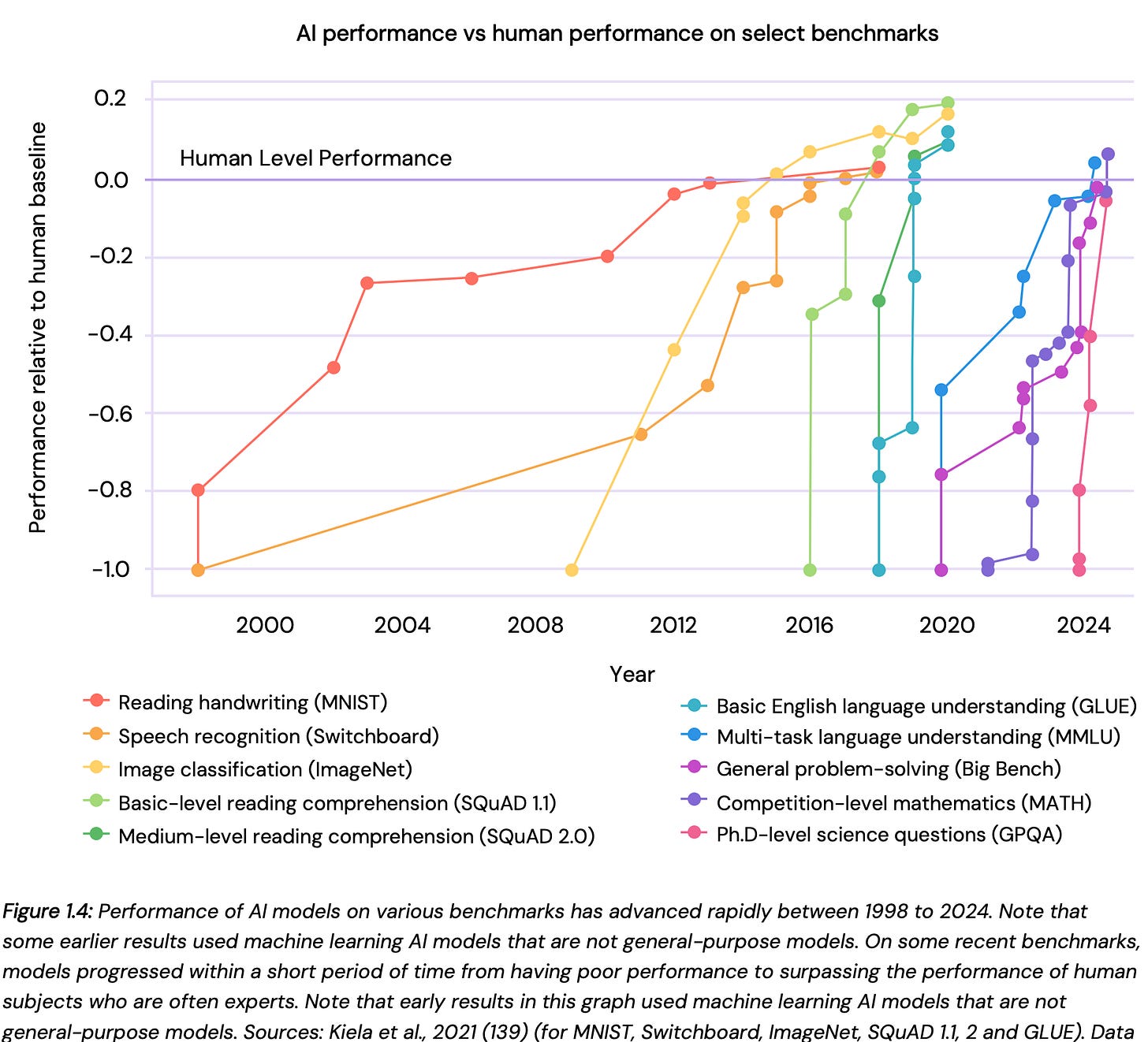

GPAI already exists – no more waiting for a hypothetical future. The best frontier AI models (hello, ChatGPT, DeepSeek, and Gemini) are already demonstrating capabilities that, in many cases, surpass top human experts on key assessments.

GPAI is advancing at a scary-fast pace – the report lays out some striking statistics. If recent trends hold, by the end of 2026 some AI models will be trained with roughly 100x more training compute than 2023’s most compute-intensive models. By 2030, that could grow to a 10,000x increase. At that point, even the most skeptical researchers might have to admit that the AGI finish line has already been crossed.
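To get a feel for how steep that curve is, the projections can be turned into an implied constant yearly growth factor: if training compute grows by a total factor F over n years, the yearly multiplier is the n-th root of F. The sketch below assumes the comparison baseline is 2023’s most compute-intensive models (treat the baseline year as an assumption; a later baseline would imply an even steeper yearly rate), and `implied_annual_growth` is a hypothetical helper written for illustration, not something taken from the report.

```python
def implied_annual_growth(total_factor: float, years: float) -> float:
    """Return the constant yearly multiplier that compounds to total_factor over `years` years."""
    if total_factor <= 0 or years <= 0:
        raise ValueError("total_factor and years must be positive")
    # If compute grows by r each year, after n years it has grown by r**n,
    # so r is the n-th root of the total growth factor.
    return total_factor ** (1.0 / years)


# Assumption: projections are measured against 2023's most compute-intensive models.
BASELINE_YEAR = 2023

# Growth factors quoted in the text: ~100x by 2026 and ~10,000x by 2030.
PROJECTIONS = {2026: 100.0, 2030: 10_000.0}

for target_year, factor in PROJECTIONS.items():
    years = target_year - BASELINE_YEAR
    rate = implied_annual_growth(factor, years)
    print(f"{factor:,.0f}x by {target_year}: about {rate:.1f}x per year over {years} years")
```

Under that 2023-baseline assumption, the two projections work out to roughly 4.6x and 3.7x more training compute per year. This is plain compounding arithmetic, not a forecasting model.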

Welcome to the Era of Two Higher Intelligences

From now on, humanity (for better or worse) coexists with two general intelligences:

Human intelligence – still top-tier (for now);

General-purpose AI – rivals us humans.

If you’re wondering whether this means AI is about to take over the world, the real question is not if, but when. And an even more pressing question is: what happens next?

What We Need to Worry About (and What’s Overhyped)

If you’ve read the International AI Safety Report 2025, you’ll know that a significant chunk of it is dedicated to risks. Of course, that’s expected: whenever you create something that might be smarter than you, risks are part of the deal.

That said, let’s cut through the noise. Not every risk in the report is equally urgent (at least as I see it). Sure, AI might pose systemic risks, such as disruptions to labor markets and other sectors, but these are not the existential threats that should keep us awake at night.

For me, two risks stand out above the rest:

AI-assisted biological weapons and warfare – because, well, survival matters.

AI-driven bias – because I can clearly see that many people are now replacing Google, and even the whole traditional education system, with AI. A biased AI can significantly shape a person’s worldview, and that would be unfortunate.

Bioweapons and AI in Warfare

As I said before, there are AI risks that are annoying, AI risks that are expensive, and then AI risks that could wipe us out.

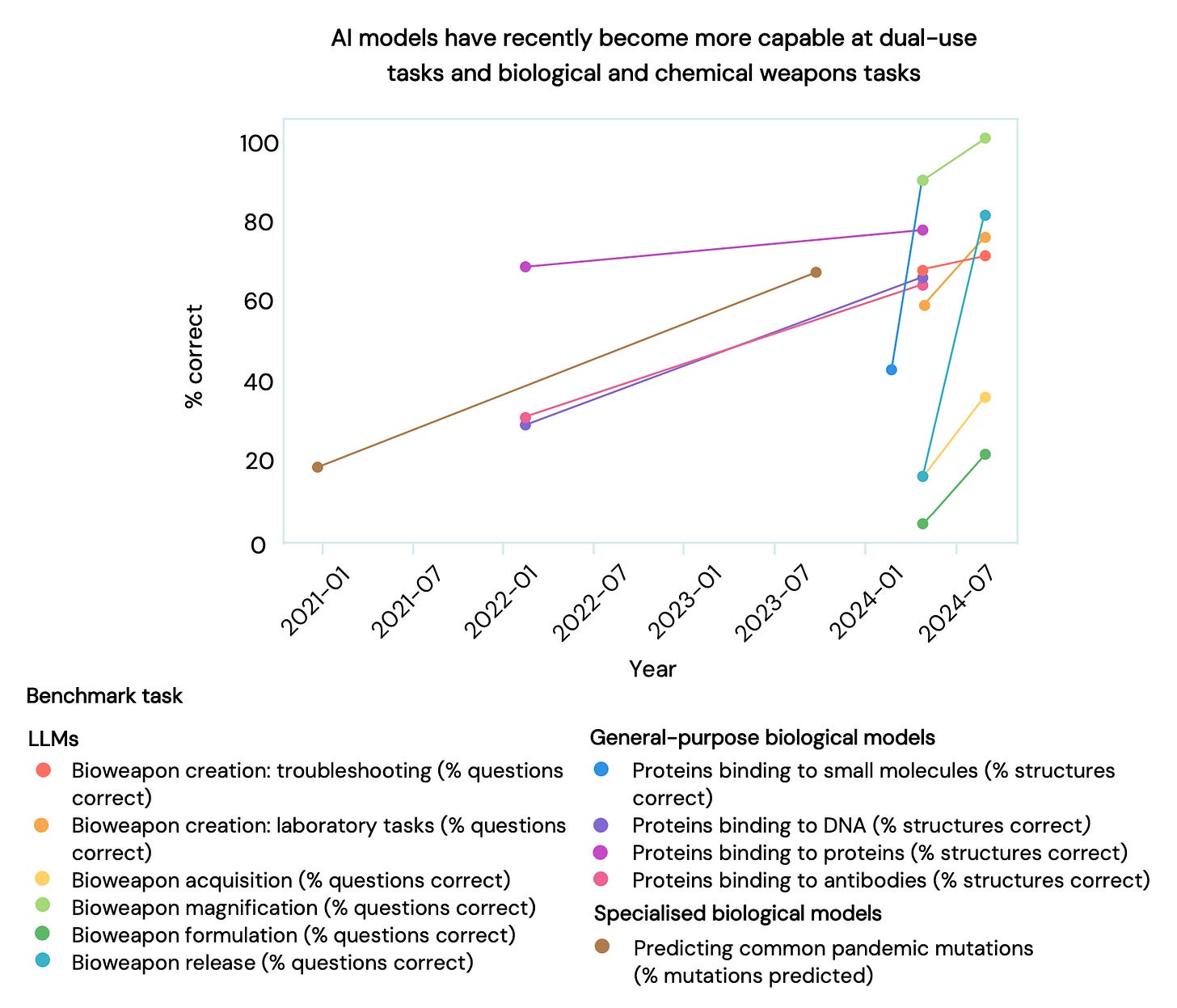

The report reveals several new findings:

AI models are already capable of generating instructions and troubleshooting steps for producing known biological and chemical weapons.

LLMs can now provide detailed, step-by-step plans for creating chemical and biological weapons, improving on plans written by people with a relevant PhD.

AI's ability to design highly targeted medical treatments has increased substantially since the Interim Report, and chat interfaces are expanding access, also heightening the risk of more potent toxins being created.

AI applications in biotechnology are lowering some barriers to the weaponisation and delivery of chemical and biological agents, but these stages remain technically complex.

Advances in biological design are occurring rapidly, creating marked uncertainty about future capabilities and risks.

In short: before AI, creating a bioweapon required years of genuine human expertise and access to high-level labs. Now AI is rapidly shrinking the distance between that level of expertise and what a determined group of people could attempt on their own.

AI Bias

This is my second favourite risk, and it’s about control.

If you've been using AI instead of Google for research, you’re not alone. Millions have switched. And if AI is your primary source of information, you’re already being shaped by its biases, whether you know it or not. In fact, we had already identified this bias in one of our previous episodes, where we dissected the AI reports for 2024.

Back to bias in the International AI Safety Report 2025. The new findings are:

There are several well-documented cases of AI systems, general-purpose or not, amplifying social or political biases, and some initial evidence suggests that this can influence the political beliefs of users.

It is difficult to address discrimination concerns effectively, as bias mitigation methods are not reliable; furthermore, achieving complete algorithmic fairness may not be technically feasible.

Even well-trained AI models prioritize engagement over truth – which means they can subtly reinforce ideological echo chambers.

Bias is an interesting, even somewhat philosophical, topic. But what we need to understand is that AI bias isn’t just about what is said – it’s also about what is left unsaid. Think about it the next time you talk to your favorite AI chatbot.

Is AI Going to Take Our Jobs?

This is the good old question people still discuss around the office watercooler. At some point, I wondered about it myself: what if AI takes my job too? Does that mean I’ll be out of work? That part, I doubt.

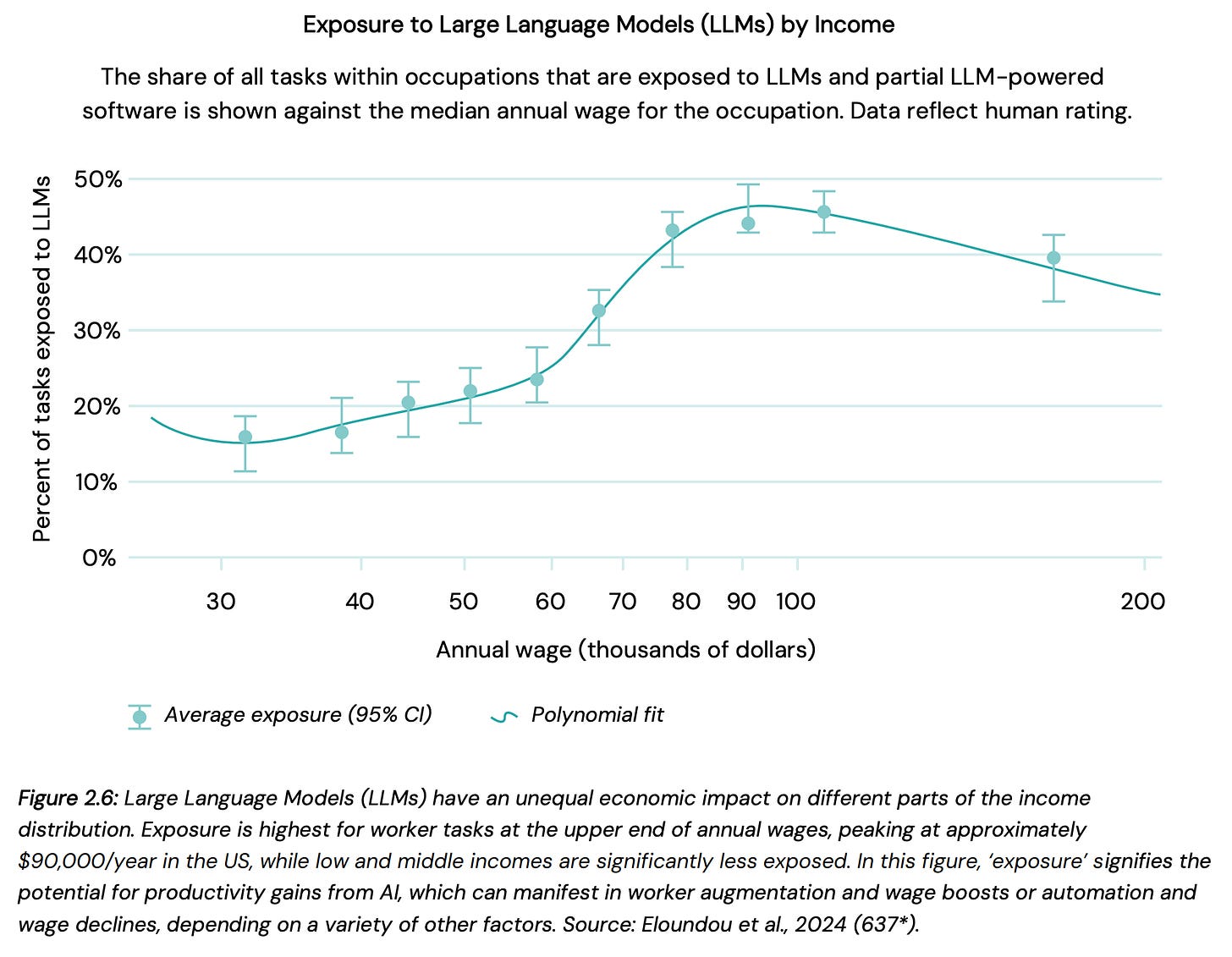

According to the AI Safety Report, up to 60% of current jobs in advanced economies could be affected by today’s GPAI systems. But here’s the thing: while some jobs will disappear, others will emerge.

Honestly, though, this is not the most interesting part. What really caught my attention is how GPAI could reshape income inequality.

In our earlier episode, "AI as Great Equalizer," we explored how AI has the potential to narrow the gap between top performers and those still developing their skills.

This idea has since been reinforced by many other researchers. And guess what? They found that general-purpose AI could reduce wage inequality! Is this good or bad? It depends on your occupation and salary (heh).

But let's get serious for a moment. The research highlights four major trends:

1. AI Might Reduce Wage Inequality Within Certain Jobs

If you’re in a cognitive-task-oriented or knowledge-based occupation, here’s the good news: AI might actually work in your favor, especially if you’re not a top earner just yet. Studies suggest that less experienced workers, or those with more basic skill sets, often see the largest productivity boosts from adopting AI. This could shrink wage gaps within certain professions. So if AI can help you handle repetitive or detail-driven cognitive tasks more quickly, you might climb the income ladder faster than your higher-earning colleagues.

2. But It’s Likely to Increase Inequality Across Occupations

The flip side: the overall gap between well-paid and lower-paid occupations might actually widen. Simulations show that AI could drive a 10% increase in wage inequality between high- and low-income occupations in advanced economies in just a decade. Why? General-purpose AI tools tend to deliver the biggest rewards in terms of productivity and income to people in high-paying, specialized roles (think executives, tech specialists, and creative leads). Meanwhile, workers in lower-paying jobs without much room for AI-driven productivity gains, or in roles that are outright automated, could see their wages stagnate or even shrink.

3. The Capital vs. Labor Divide Could Worsen

This is perhaps the biggest red flag. Historically, automation has tended to reduce the share of economic income that goes to workers while increasing returns to capital owners (the folks who own businesses, factories, and now algorithms). AI could supercharge this divide: as more work gets automated and capital, not workers, drives the biggest productivity gains, the wealthy few who already dominate capital ownership might capture an even larger piece of the economic pie at the expense of everyone else.

4. A Widening Gap Between Countries

On a global scale, high-income countries (HICs) appear better equipped to adopt and benefit from AI than low- and middle-income countries (LMICs). HICs boast robust digital infrastructure, highly skilled workforces, and well-developed innovation ecosystems. This makes them prime candidates to reap the rewards of AI-driven productivity growth. This divergence could lead to an even wider income gap between rich and poor nations.

Will AI take your job? Maybe not. But it will change how we work and who benefits. After all, love it or hate it, AI is coming for all of us, just not in the same way (recall our "AI as Great Equalizer" episode).

Final Thoughts: What Should We Worry About Most?

We can talk about AI risks all day – job automation, deepfake scandals, ethical concerns – but if I had to prioritize survival, I’d focus on these two problems:

AI-facilitated biological weapons and autonomous warfare;

and AI bias shaping global beliefs.

Everything else? Important, yes. But these two are the ones that could define whether AI empowers us or controls us.

And if you're still wondering about AGI? Well, the International AI Safety Report mentions it exactly twice: once in the main text and once in the glossary. That’s it. That’s how little the actual experts care about this overhyped, vague, sci-fi term. Instead, they’ve made it clear:

General-Purpose AI (GPAI) is the real deal, and it’s here now.

AI has already outperformed humans in key areas, it’s already rewiring our digital ecosystem, and it’s already changing the way we make decisions.

As for the rest, I largely agree with the executive summary of the AI Safety Report. It’s a solid, well-balanced take on where AI research stands today.

If there’s one takeaway, it’s this: we’re entering an era where two forms of intelligence will coexist on Earth.

Not because we finally built that mythical AGI, but because we've finally started seeing AI for what it really is – a powerful, general-purpose technology that's already transforming our world.

On that note, I wish you all a happy futuristic future, and I'll see you next time!
